AWS Lambda

| Traces | Metrics | App Logs | Custom Logs | Profiling |
|---|---|---|---|---|
| ✅ | ✅ | ✅ | ✅ | ✖ |

Why does Lambda need a special setup?

- Early exits & freezing: Lambda can return and freeze the container quickly, so you need a configuration that flushes spans immediately.

- Layers by language: Auto-instrumentation is delivered via AWS-managed ADOT layers (or community OTel layers) and must be combined with a Collector layer. Order matters.

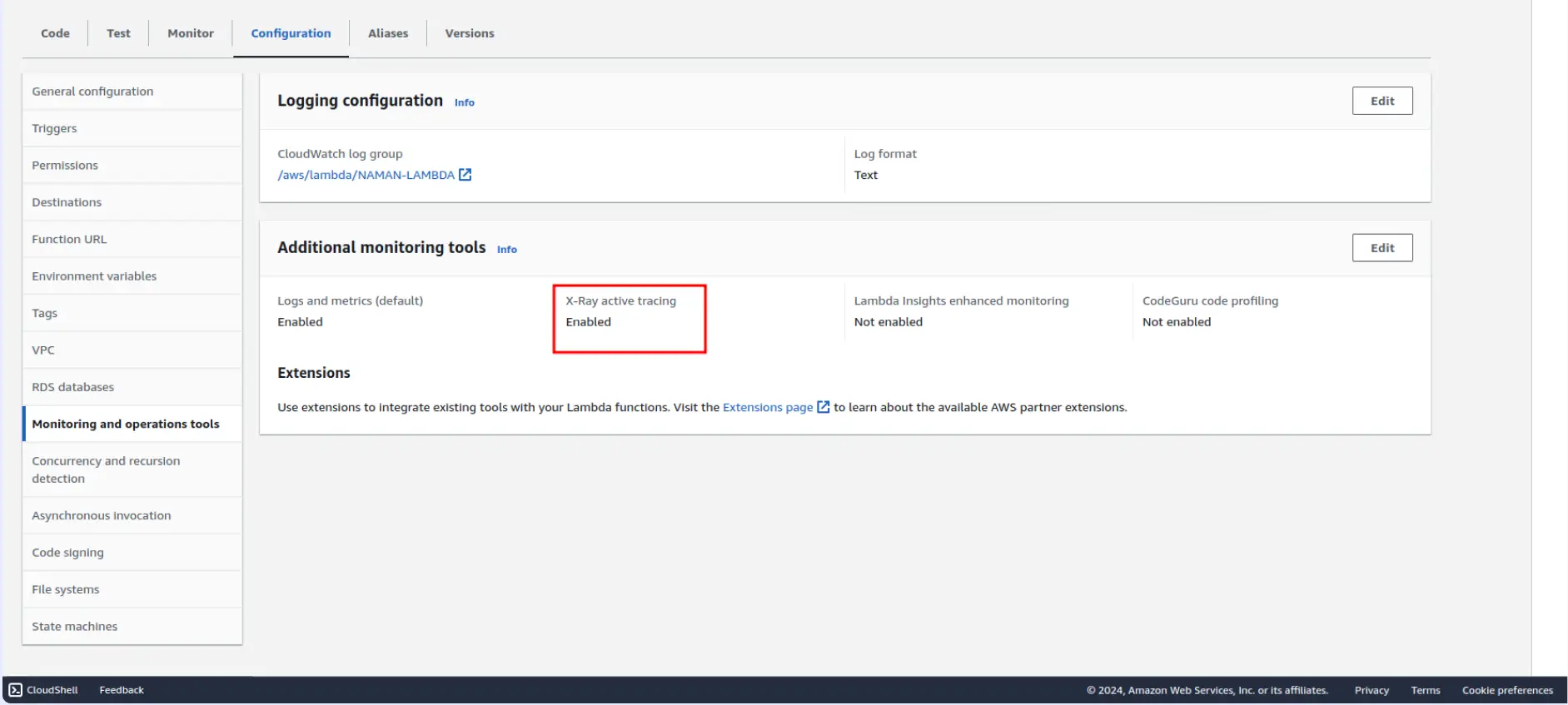

- X-Ray: Enabling Active Tracing gives better service maps and context propagation.

1. Prerequisites

- AWS account with permissions to manage Lambda, layers, and environment variables.

- Your Middleware account key.

- (Optional) Enable Active tracing in Lambda if you also want AWS X-Ray correlation.

Note on ARNs: The OpenTelemetry community layers are regional and published by AWS account 184161586896. Always copy the latest ARNs for your Region and architecture (amd64/arm64) from the official opentelemetry-lambda Releases page.

Example template:

arn:aws:lambda:<region>:184161586896:layer:opentelemetry-nodejs-0_16_0:1

Node.js

1 Copy the ARN for the OpenTelemetry (OTel) Collector Lambda Layer:

- Navigate to the OpenTelemetry Collector Lambda Repository and locate the appropriate layer for your environment.

- Copy the Amazon Resource Name (ARN) and change the <region> and <amd64|arm64> tags to the Region and CPU architecture of your Lambda.

arn:aws:lambda:<region>:184161586896:layer:opentelemetry-collector-<amd64|arm64>-0_11_0:1

2 Copy the ARN for the OTel Auto Instrumentation Layer:

- Navigate to the OpenTelemetry Lambda Repository and locate the appropriate layer for your environment.

- Copy the ARN and change the <region> tag to the Region your Lambda is in.

arn:aws:lambda:<region>:184161586896:layer:opentelemetry-nodejs-0_9_0:4

3 In the AWS Console, navigate to Lambda → Functions → your specific Lambda function and create a Layer for each ARN above

The Layers above must be added in order - Collector, then Auto Instrumentation - or they will not work.

4 Review the added layer and ensure it matches the ARN. Finally, click Add to complete the process.
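The layer steps above can also be expressed as data. The sketch below is only an illustration: it fills in the ARN templates and lists the layers in the required order (Collector first). The helper names and the function name my-function are hypothetical, and the release numbers are simply the ones quoted above, so copy the current values from the Releases page.

```python
PUBLISHER_ACCOUNT = "184161586896"  # community OTel layer publisher quoted above


def layer_arn(region: str, layer_name: str, layer_version: int) -> str:
    """Fill the generic Lambda layer ARN template."""
    return (
        f"arn:aws:lambda:{region}:{PUBLISHER_ACCOUNT}"
        f":layer:{layer_name}:{layer_version}"
    )


def collector_layer_name(arch: str, release: str) -> str:
    """Collector layers are published per CPU architecture."""
    if arch not in ("amd64", "arm64"):
        raise ValueError(f"unsupported architecture: {arch!r}")
    return f"opentelemetry-collector-{arch}-{release}"


def ordered_layers(region: str, arch: str) -> list:
    """Return the two layer ARNs in the order they must be attached."""
    collector = layer_arn(region, collector_layer_name(arch, "0_11_0"), 1)
    nodejs = layer_arn(region, "opentelemetry-nodejs-0_9_0", 4)
    return [collector, nodejs]  # Collector first, then auto-instrumentation


def update_request(function_name: str, region: str, arch: str) -> dict:
    """Keyword arguments shaped for boto3's update_function_configuration."""
    return {
        "FunctionName": function_name,
        "Layers": ordered_layers(region, arch),
    }


if __name__ == "__main__":
    for arn in update_request("my-function", "us-east-1", "arm64")["Layers"]:
        print(arn)
```

If boto3 is installed and credentials are configured, the dictionary could be passed as boto3.client("lambda").update_function_configuration(**request). That call replaces the function's whole layer list, so include every layer you want to keep.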

5 Set these environment variables:

If you are using *.mjs / *.ts you may need to set NODE_OPTIONS=--import ./lambda-config.[mjs/ts]

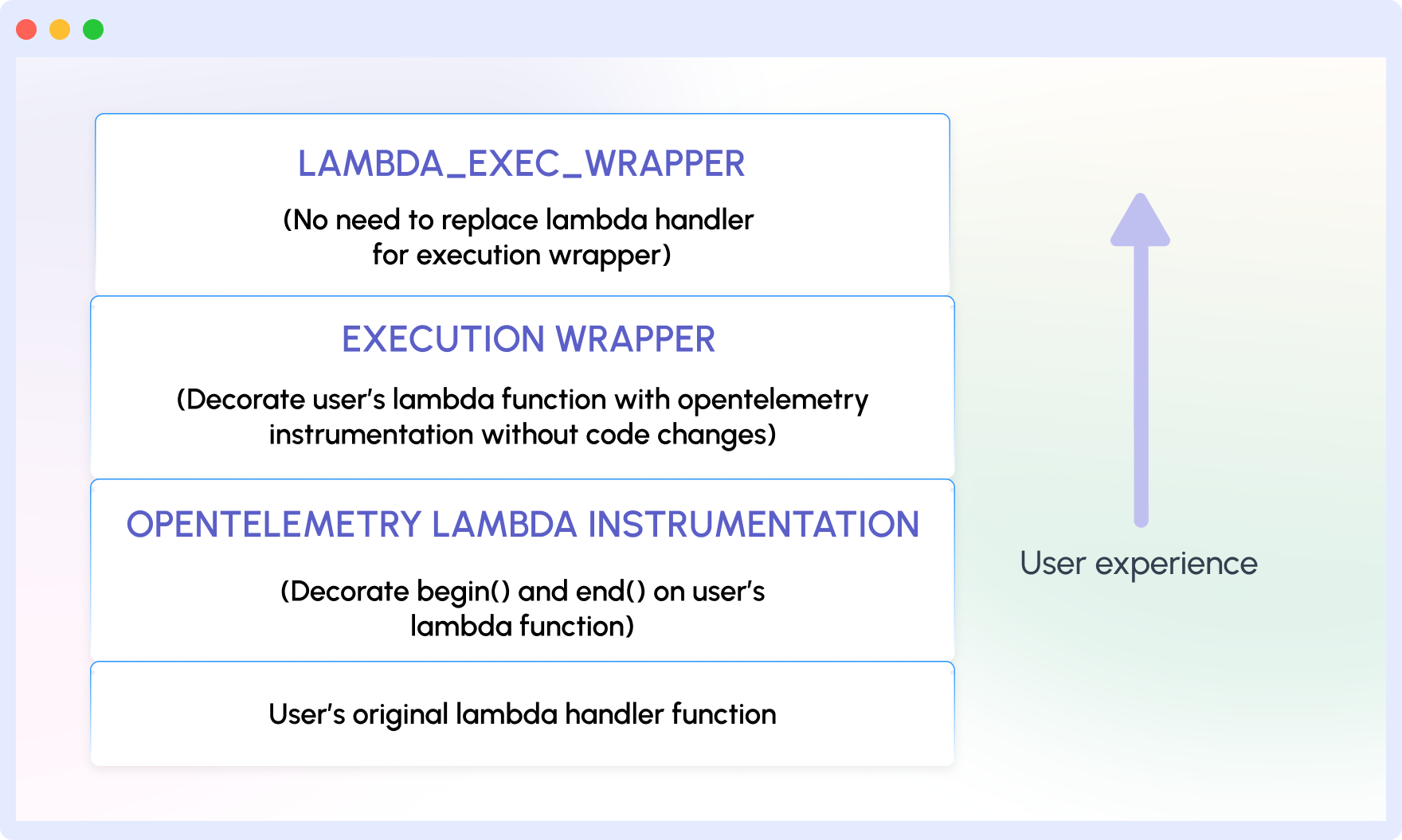

AWS_LAMBDA_EXEC_WRAPPER=/opt/otel-handler

NODE_OPTIONS=--require ./lambda-config.js

OTEL_SERVICE_NAME=your-service-name

OTEL_EXPORTER_OTLP_ENDPOINT=https://<MW_UID>.middleware.io:443

OTEL_RESOURCE_ATTRIBUTES=mw.account_key=<MW_API_KEY>

OTEL_LAMBDA_DISABLE_AWS_CONTEXT_PROPAGATION=true

OTEL_PROPAGATORS=tracecontext

6 Create a file named lambda-config.js in your Lambda function's root directory with the following content:


const { SimpleSpanProcessor } = require("@opentelemetry/sdk-trace-base");
const {
  OTLPTraceExporter,
} = require("@opentelemetry/exporter-trace-otlp-proto");

// The layer calls this hook with its tracer provider. SimpleSpanProcessor
// exports each span as soon as it ends, before Lambda can freeze the container.
global.configureTracerProvider = (tracerProvider) => {
  const spanProcessor = new SimpleSpanProcessor(new OTLPTraceExporter());
  tracerProvider.addSpanProcessor(spanProcessor);
};

(Optional) Custom spans in code

To capture logic specific to your business, you can use the OpenTelemetry API as follows:

const { trace, SpanStatusCode } = require("@opentelemetry/api");
const { logs, SeverityNumber } = require("@opentelemetry/api-logs");

// Returns a tracer from the global tracer provider
// Use this to create spans
const tracer = trace.getTracer("<MODULE-OR-FILE-NAME>", "1.0.0");

/* Example simple logger */
const logger = {
  info: (message, attributes = {}) => {
    // Create a log record of type INFO
    const logRecord = {
      timestamp: Date.now(),
      severityText: "INFO",
      severityNumber: SeverityNumber.INFO,
      body: message,
      attributes: {
        "deployment.environment": process.env.ENVIRONMENT || "development",
        ...attributes,
      },
    };
    // Emit the log
    logs.getLogger("<MODULE-OR-FILE-NAME>").emit(logRecord);
    // Console log in tandem
    console.log(message);
  },
};

// Lambda handler
exports.handler = async (event, context) =>
  // Start a span named "lambdaHandler" and make it the active span, so that
  // logs emitted inside the callback are associated with it
  tracer.startActiveSpan("lambdaHandler", async (span) => {
    try {
      // Add a span attribute
      span.setAttribute("event", JSON.stringify(event));

      // Your Lambda function logic here
      const result = "Hello from Lambda!";

      // Use the logger created above to emit logs
      logger.info("dummy log from aws lambda");
      logger.info("dummy log from aws lambda with custom attributes", {
        foo: "bar",
      });

      span.addEvent("Lambda execution completed");
      return {
        statusCode: 200,
        body: JSON.stringify({ message: result }),
      };
    } catch (error) {
      // Record the failure on the span so it shows up as an error
      span.recordException(error);
      span.setStatus({ code: SpanStatusCode.ERROR, message: error.message });
      return {
        statusCode: 500,
        body: JSON.stringify({ error: error.message }),
      };
    } finally {
      span.end();
    }
  });

Python

1 Copy the ARN for the OpenTelemetry (OTel) Collector Lambda Layer:

- Navigate to the OpenTelemetry Collector Lambda Repository and locate the appropriate layer for your environment. Here is a list of Python layers.

- Copy the Amazon Resource Name (ARN) and change the <region> and <amd64|arm64> tags to the Region and CPU architecture of your Lambda.

arn:aws:lambda:<region>:184161586896:layer:opentelemetry-collector-<amd64|arm64>-0_13_0:1

- Create a new file named collector.yaml with the following contents, along with your Lambda function code:

receivers:
  telemetryapi: {}
  otlp:
    protocols:
      grpc:
        endpoint: "localhost:9319"
      http:
        endpoint: "localhost:9320"
exporters:
  debug:
    verbosity: detailed
  otlp:
    endpoint: 'https://your-initial-uid.middleware.io:443'
    headers:
      authorization: your-initial-token
processors:
  decouple: {}
  resource:
    attributes:
      - action: upsert
        key: service.name
        value: my-service-name
service:
  pipelines:
    traces:
      receivers:
        - telemetryapi
        - otlp
      processors:
        - resource
        - decouple
      exporters:
        - otlp
    logs:
      receivers:
        - telemetryapi
        - otlp
      processors:
        - resource
        - decouple
      exporters:
        - otlp

2 Copy the ARN for the OTel Auto Instrumentation Layer:

- Navigate to the OpenTelemetry Lambda Repository and locate the appropriate layer for your environment.

- Copy the ARN and change the <region> tag to the Region your Lambda is in.

arn:aws:lambda:<region>:184161586896:layer:opentelemetry-python-0_12_0:1

- In the AWS Console, navigate to Lambda → Functions → your specific Lambda function and create a Layer for each ARN above.

The Layers above must be added in order - Collector, then Auto Instrumentation - or they will not work.

- Review the added layer and ensure it matches the ARN. Finally, click Add to complete the process.

- Set the following environment variables in your Lambda function configuration:

AWS_LAMBDA_EXEC_WRAPPER=/opt/otel-instrument OPENTELEMETRY_COLLECTOR_CONFIG_URI=/var/task/collector.yaml OPENTELEMETRY_EXTENSION_LOG_LEVEL=info OTEL_BSP_SCHEDULE_DELAY=500 OTEL_EXPORTER_OTLP_ENDPOINT=http://localhost:9320 OTEL_LAMBDA_DISABLE_AWS_CONTEXT_PROPAGATION=true OTEL_PROPAGATORS=tracecontext - Update Log format to

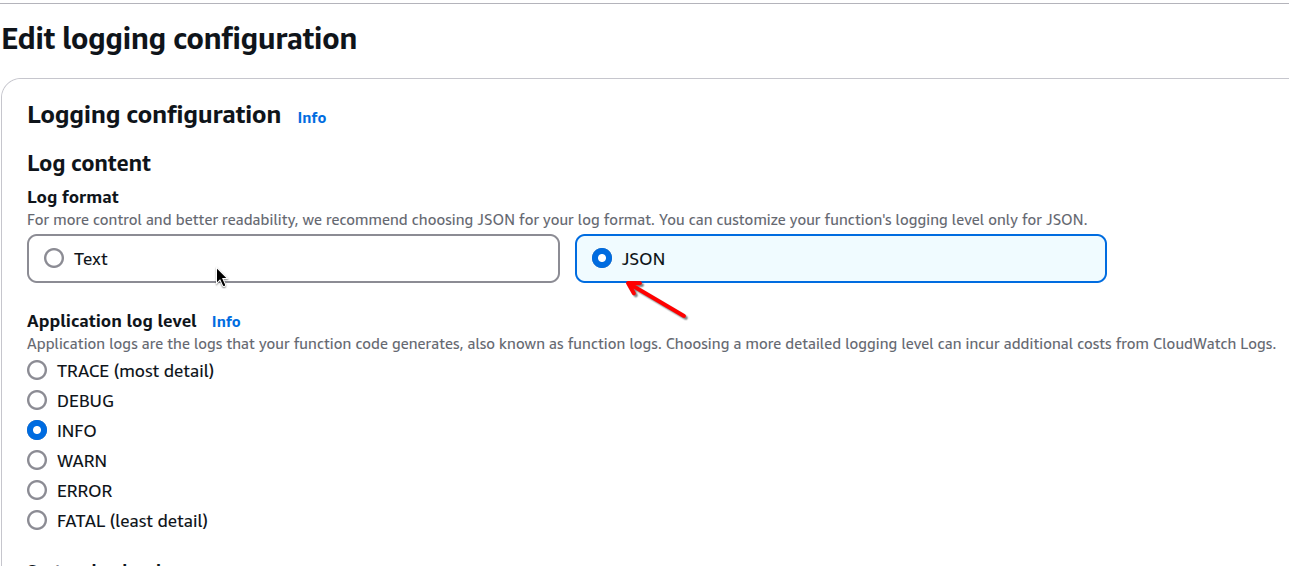

JSONfor your Lambda function by navigating to Lambda → Functions → your specific Lambda function → Configuration → Monitoring and operations tools. Click onEditandset log format to JSON.

- Import and set up a LoggerProvider to send log messages at desired log levels:

import json
import logging


def lambda_handler(event, context):
    # Get a named logger and set the minimum level it emits
    logger1 = logging.getLogger("logger1")
    logger1.setLevel(logging.DEBUG)

    logger1.debug("This is a debug message from aws lambda")
    logger1.info("This is an info message from aws lambda")

    # Note: set this to False, or else logs will show up twice in Middleware
    logger1.propagate = False

    # Flush any buffered log records before the handler returns
    logging.shutdown()

    return {
        'statusCode': 200,
        'body': json.dumps('Hello from Lambda!')
    }

logger1.propagate must be set to False in your code. Otherwise, logs will show up twice in your Middleware account.
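To see why propagation can double a record, here is a small standard-library sketch. It assumes, purely for illustration, that one handler is attached to the named logger and another to the root logger; which handlers are actually attached inside the Lambda runtime is not shown in this guide, and CountingHandler and count_deliveries are hypothetical names.

```python
import logging


class CountingHandler(logging.Handler):
    """Stand-in for an exporter: remembers every record it receives."""

    def __init__(self):
        super().__init__()
        self.messages = []

    def emit(self, record):
        self.messages.append(record.getMessage())


def count_deliveries(propagate: bool) -> int:
    """Log one message and count how many handlers received it."""
    own_handler = CountingHandler()
    root_handler = CountingHandler()

    root = logging.getLogger()
    logger = logging.getLogger("demo.logger1")
    logger.setLevel(logging.INFO)

    root.addHandler(root_handler)
    logger.addHandler(own_handler)
    logger.propagate = propagate
    try:
        logger.info("hello from lambda")
    finally:
        # Restore global logging state so the demo has no side effects
        root.removeHandler(root_handler)
        logger.removeHandler(own_handler)
        logger.propagate = True

    return len(own_handler.messages) + len(root_handler.messages)


if __name__ == "__main__":
    print("propagate=True :", count_deliveries(True))
    print("propagate=False:", count_deliveries(False))
```

With propagation on, the record reaches both the logger's own handler and the root handler; with it off, only the logger's own handler sees it.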

Java

1 Copy the ARN for the OpenTelemetry (OTel) Java agent Lambda Layer:

- Navigate to the OpenTelemetry Collector Lambda Repository and locate the appropriate layer for your environment. Here is a list of Java layers

- Copy the Amazon Resource Name (ARN) and change the Region (us-east-1 in the example below) to the Region of your Lambda.

arn:aws:lambda:us-east-1:184161586896:layer:opentelemetry-javaagent-0_9_0:1

2 Set the following environment variables in your Lambda function configuration:

AWS_LAMBDA_EXEC_WRAPPER=/opt/otel-handler

OTEL_EXPORTER_OTLP_ENDPOINT=https://<MW_UID>.middleware.io:443

OTEL_LAMBDA_DISABLE_AWS_CONTEXT_PROPAGATION=true

OTEL_PROPAGATORS=tracecontext

OTEL_RESOURCE_ATTRIBUTES=mw.account_key=<YOUR_MW_ACCOUNT_KEY>

OTEL_SERVICE_NAME={JAVA-LAMBDA-SERVICE}

3 Add Custom Instrumentation (Optional)

Your Lambda function is automatically instrumented. If you need to add custom spans or attributes, you can use the OpenTelemetry API:

Add the following as dependencies:

- Gradle:

plugins {
    id("java")
    id("io.opentelemetry.instrumentation.muzzle-check") version "1.28.0"
}

dependencies {
    implementation 'io.opentelemetry:opentelemetry-api:1.28.0'
    implementation 'com.amazonaws:aws-lambda-java-core:1.2.1'
}

repositories {
    mavenCentral()
}

- Maven:

<dependencies>
    <!-- OpenTelemetry Dependencies -->
    <dependency>
        <groupId>io.opentelemetry</groupId>
        <artifactId>opentelemetry-api</artifactId>
        <version>1.28.0</version>
    </dependency>

    <!-- AWS Lambda Dependencies -->
    <dependency>
        <groupId>com.amazonaws</groupId>
        <artifactId>aws-lambda-java-core</artifactId>
        <version>1.2.1</version>
    </dependency>
</dependencies>

These dependencies add the OpenTelemetry API (for optional manual spans in your handler) and the AWS Lambda core interfaces (RequestHandler, Context). The ADOT Java agent layer handles auto-instrumentation and exporting, so you don’t need extra exporter SDKs here. Keep the opentelemetry-api version compatible with your Lambda runtime and the ADOT agent layer in use.

Go

The goal is to instrument your Go Lambda with the official OTel Go wrapper and send traces directly to Middleware over OTLP/HTTP (no Lambda layer required for Go).

1 Install / import the required packages

Add these imports at the top of your Lambda project’s entry point:

import (
	"context"
	"log"

	"github.com/aws/aws-lambda-go/lambda"
	"go.opentelemetry.io/contrib/instrumentation/github.com/aws/aws-lambda-go/otellambda"
	"go.opentelemetry.io/otel"
	"go.opentelemetry.io/otel/attribute"
	"go.opentelemetry.io/otel/exporters/otlp/otlptrace/otlptracehttp"
	"go.opentelemetry.io/otel/sdk/resource"
	"go.opentelemetry.io/otel/sdk/trace"
)

Here,

- otellambda.InstrumentHandler is the official wrapper for Go Lambdas.

- The HTTP OTLP exporter (otlptracehttp) is the correct exporter for sending spans via OTLP/HTTP.

2 Configure your Middleware account and tracer provider

Create a helper that builds a tracer provider and points the exporter directly to Middleware’s OTLP endpoint.

Important: with the HTTP exporter, use WithEndpointURL and include the full /v1/traces URL (or use the env var OTEL_EXPORTER_OTLP_TRACES_ENDPOINT).

func initTracerProvider(ctx context.Context) (*trace.TracerProvider, error) {
	// Export spans directly to Middleware (OTLP/HTTP).
	// WithEndpointURL expects a full URL; include /v1/traces.
	exp, err := otlptracehttp.New(ctx,
		otlptracehttp.WithEndpointURL("https://ruplp.middleware.io/v1/traces"),
	)
	if err != nil {
		return nil, err
	}

	// Add useful resource attributes (seen in Middleware APM service list/filters)
	res := resource.NewSchemaless(
		attribute.String("service.name", "{GO-LAMBDA-SERVICE}"),
		attribute.String("mw.account_key", "<YOUR_MW_ACCOUNT_KEY>"),
		attribute.String("library.language", "go"),
	)

	tp := trace.NewTracerProvider(
		trace.WithBatcher(exp),
		trace.WithResource(res),
	)
	otel.SetTracerProvider(tp)
	return tp, nil
}

Here,

- WithEndpointURL (or env vars) is the recommended way to set an HTTPS URL for the HTTP exporter. WithEndpoint("host:port") is meant for host:port only and does not take a scheme or path.

- Alternative (env only): set OTEL_EXPORTER_OTLP_TRACES_ENDPOINT=https://ruplp.middleware.io/v1/traces and call otlptracehttp.New(ctx) with no options. The exporter reads the env var.
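The relationship between the base endpoint and the per-signal URL can be sketched in a few lines. This is an illustration written in Python rather than Go, following the OTLP/HTTP convention that a per-signal variable is used exactly as given while the base variable gets /v1/<signal> appended; signal_url and resolve_traces_url are hypothetical helper names, not part of any SDK.

```python
SIGNALS = ("traces", "metrics", "logs")


def signal_url(base_endpoint: str, signal: str) -> str:
    """Append the OTLP/HTTP per-signal path to a base endpoint."""
    if signal not in SIGNALS:
        raise ValueError(f"unknown signal: {signal!r}")
    return f"{base_endpoint.rstrip('/')}/v1/{signal}"


def resolve_traces_url(env: dict):
    """Pick the URL an OTLP/HTTP trace exporter would post to."""
    specific = env.get("OTEL_EXPORTER_OTLP_TRACES_ENDPOINT")
    if specific:
        return specific  # per-signal value is used exactly as given
    base = env.get("OTEL_EXPORTER_OTLP_ENDPOINT")
    return signal_url(base, "traces") if base else None


if __name__ == "__main__":
    print(signal_url("https://ruplp.middleware.io", "traces"))
    print(resolve_traces_url({"OTEL_EXPORTER_OTLP_ENDPOINT": "https://ruplp.middleware.io/"}))
```

Passing a bare host to an option that expects a full URL (or the reverse) is the usual way the /v1/traces suffix goes missing, which is why the per-signal variable is the safer choice here.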

3 Add your handler (custom attributes optional)

type MyEvent struct {
	Name string `json:"name"`
	Age  int    `json:"age"`
}

type MyResponse struct {
	Message string `json:"message"`
}

func HandleLambdaEvent(ctx context.Context, event *MyEvent) (*MyResponse, error) {
	tracer := otel.Tracer("lambda")
	_, span := tracer.Start(ctx, "HandleLambdaEvent")
	defer span.End()

	// Add any domain attributes you want to see in traces
	span.SetAttributes(
		attribute.String("user.name", event.Name),
		attribute.Int("user.age", event.Age),
	)

	return &MyResponse{Message: "ok"}, nil
}

4 Wire the wrapper in main and ensure flush

func main() {
	ctx := context.Background()

	tp, err := initTracerProvider(ctx)
	if err != nil {
		log.Fatalf("failed to init tracer provider: %v", err)
	}
	defer tp.Shutdown(ctx) // flush on shutdown

	// Wrap your handler so spans are started/ended around each invocation.
	// WithFlusher ensures batches flush before the runtime freezes.
	lambda.Start(otellambda.InstrumentHandler(
		HandleLambdaEvent,
		otellambda.WithFlusher(tp),
	))
}

InstrumentHandler is the supported wrapper; WithFlusher is designed for Lambda’s short-lived execution model and ensures spans are exported before the runtime freezes.
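The flush-before-freeze idea is not specific to Go. As a rough sketch of the same pattern in Python, the decorator below runs the handler and then always calls force_flush() on a flusher object, even when the handler raises. flush_after and RecordingFlusher are hypothetical names for illustration; in a real function the flusher would be whatever object your SDK exposes with a force_flush() method.

```python
import functools


def flush_after(flusher):
    """Decorator: flush telemetry after every invocation, success or failure."""

    def decorate(handler):
        @functools.wraps(handler)
        def wrapped(event, context):
            try:
                return handler(event, context)
            finally:
                # Runs before the result is handed back to the runtime,
                # i.e. before the container can be frozen.
                flusher.force_flush()

        return wrapped

    return decorate


class RecordingFlusher:
    """Stand-in flusher that only counts how often it was asked to flush."""

    def __init__(self):
        self.calls = 0

    def force_flush(self):
        self.calls += 1


if __name__ == "__main__":
    flusher = RecordingFlusher()

    @flush_after(flusher)
    def handler(event, context):
        return {"statusCode": 200}

    print(handler({}, None), "flushes:", flusher.calls)
```

Putting the flush in a finally block matters: error paths are often the invocations you most want to see in traces.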

.NET

1 First, ensure that you have .NET 6 or later on your build machine. Verify with:

dotnet --version

2 Add the Middleware agent package to your project:

dotnet add package MW.APM

3 Add the following to your Program.cs. This wires MW.APM using the .NET 6 minimal hosting pattern and sets up console logging for quick validation.

var builder = WebApplication.CreateBuilder(args);

var configuration = new ConfigurationBuilder()
    .SetBasePath(Directory.GetCurrentDirectory())
    .AddJsonFile("appsettings.json", optional: false, reloadOnChange: true)
    .AddEnvironmentVariables()
    .Build();

builder.Services.ConfigureMWInstrumentation(configuration);

builder.Logging.AddConfiguration(configuration.GetSection("Logging"));
builder.Logging.AddConsole();
builder.Logging.SetMinimumLevel(LogLevel.Debug);

// Build the app
var app = builder.Build();

// Initialize MW.APM logger AFTER app is built
Logger.Init(app.Services.GetRequiredService<ILoggerFactory>());

// Define endpoints / handlers as needed
// app.MapGet("/", () => "OK");

app.Run();

4 Add your account key, target URL, and service name to appsettings.json:

{
  "MW": {
    "ApiKey": "<YOUR_MW_ACCOUNT_KEY>",
    "TargetURL": "<MW_UID>.middleware.io:443",
    "ServiceName": "{SERVICE NAME}"
  },
  "Logging": {
    "LogLevel": {
      "Default": "Information",
      "Microsoft.AspNetCore": "Warning"
    }
  }
}

Verify Installation

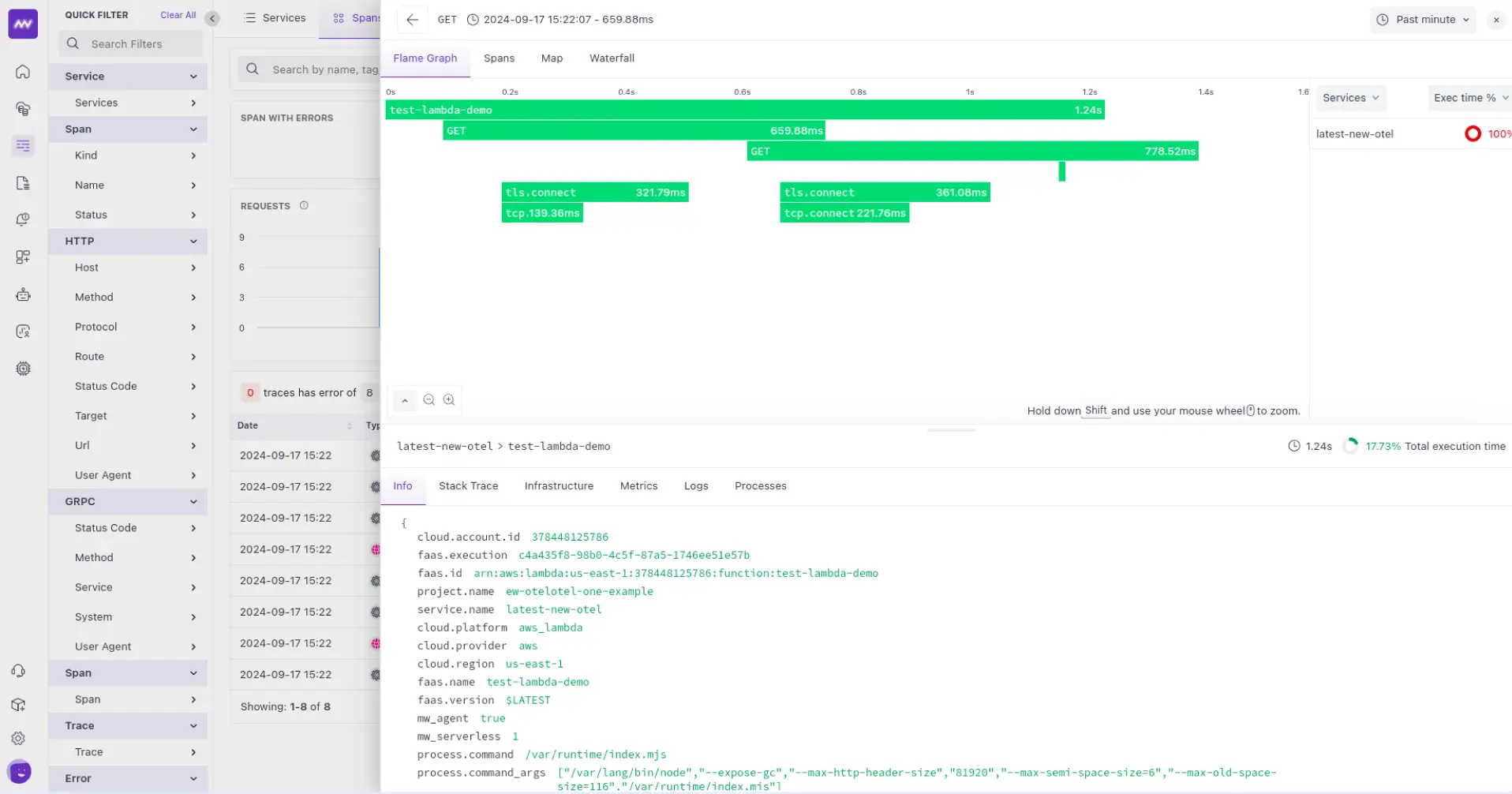

After you deploy and invoke your function, check your Middleware account for traces: navigate to APM → Services and find the name of your function in the services list.

X-Ray Tracing

Ensure X-Ray tracing is enabled for your Lambda function to get the full benefit of the instrumentation.

Environment Variables (Quick Reference)

| Variable | Purpose | Example value |
|---|---|---|
| AWS_LAMBDA_EXEC_WRAPPER | Starts auto-instrumentation before your handler | Node/Java: /opt/otel-handler • Python: /opt/otel-instrument |
| OTEL_SERVICE_NAME | Service name shown in Middleware | {NODEJS-LAMBDA-SERVICE} / {PYTHON-LAMBDA-SERVICE} / {JAVA-LAMBDA-SERVICE} |
| OTEL_PROPAGATORS | Context propagation format | tracecontext |
| OTEL_RESOURCE_ATTRIBUTES | Resource metadata (incl. Middleware key) | mw.account_key=<YOUR_MW_ACCOUNT_KEY> |
| OTEL_LAMBDA_DISABLE_AWS_CONTEXT_PROPAGATION | Disable AWS/X-Ray propagation if desired | true |
| NODE_OPTIONS | Preload bootstrap file (Node) | --require ./lambda-config.js |
| OTEL_PYTHON_LOGGING_AUTO_INSTRUMENTATION_ENABLED | Auto-capture Python logs | true |
| OTEL_LOGS_EXPORTER | Export logs via OTLP | otlp |
| OTEL_BSP_SCHEDULE_DELAY | Span batcher flush cadence (ms) | 500 |
| OTEL_EXPORTER_OTLP_ENDPOINT | Direct export base endpoint | https://ruplp.middleware.io |
| OTEL_EXPORTER_OTLP_TRACES_ENDPOINT | Direct export (traces) full URL (HTTP) | https://ruplp.middleware.io/v1/traces |
| OTEL_EXPORTER_OTLP_METRICS_ENDPOINT | Direct export (metrics) full URL (HTTP) | https://ruplp.middleware.io/v1/metrics |
| OTEL_EXPORTER_OTLP_LOGS_ENDPOINT | Direct export (logs) full URL (HTTP) | https://ruplp.middleware.io/v1/logs |
| OPENTELEMETRY_COLLECTOR_CONFIG_URI | If using the Collector layer, where it reads config | /var/task/collector.yaml (or s3://…, https://…) |
| OPENTELEMETRY_EXTENSION_LOG_LEVEL | Collector extension log level | info / debug |
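A quick way to catch the most common mistakes in these variables is to check them before deploying. The sketch below is an illustrative checker, not an official validator: check_env, parse_resource_attributes, and the runtime keys are hypothetical names, and the rules simply mirror the wrapper paths and required values listed in the table above.

```python
EXPECTED_WRAPPER = {
    "nodejs": "/opt/otel-handler",
    "java": "/opt/otel-handler",
    "python": "/opt/otel-instrument",
}


def parse_resource_attributes(raw: str) -> dict:
    """Split 'k1=v1,k2=v2' into a dict; a value keeps any extra '='."""
    attrs = {}
    for pair in raw.split(","):
        key, sep, value = pair.partition("=")
        if sep:
            attrs[key.strip()] = value.strip()
    return attrs


def check_env(runtime: str, env: dict) -> list:
    """Return a list of human-readable problems (empty means none found)."""
    problems = []

    expected = EXPECTED_WRAPPER[runtime]
    if env.get("AWS_LAMBDA_EXEC_WRAPPER") != expected:
        problems.append(f"AWS_LAMBDA_EXEC_WRAPPER should be {expected}")

    if not env.get("OTEL_SERVICE_NAME"):
        problems.append("OTEL_SERVICE_NAME is not set")

    attrs = parse_resource_attributes(env.get("OTEL_RESOURCE_ATTRIBUTES", ""))
    key = attrs.get("mw.account_key", "")
    if not key:
        problems.append("mw.account_key missing from OTEL_RESOURCE_ATTRIBUTES")
    elif key.startswith("="):
        problems.append("mw.account_key value starts with '=' (doubled '='?)")

    return problems


if __name__ == "__main__":
    sample = {
        "AWS_LAMBDA_EXEC_WRAPPER": "/opt/otel-handler",
        "OTEL_SERVICE_NAME": "checkout",
        "OTEL_RESOURCE_ATTRIBUTES": "mw.account_key=abc123",
    }
    print(check_env("python", sample))
```

Run locally, the function can be fed dict(os.environ) or the variables exported from your deployment tooling.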

Troubleshooting

1) “I don’t see any traces in Middleware”

Likely causes

- The Lambda wrapper isn’t running (Node/Java/Python only).

- You’re exporting to the wrong endpoint or missing the /v1/traces path for OTLP/HTTP (common in Go).

- The Collector layer (if used) isn’t reading your config.

- The function has no internet egress (VPC without NAT).

What to check

- Wrappers: In Node, Java, and Python, confirm the wrapper env var is set exactly:

  - Node/Java: AWS_LAMBDA_EXEC_WRAPPER=/opt/otel-handler

  - Python: AWS_LAMBDA_EXEC_WRAPPER=/opt/otel-instrument

- Endpoints: For OTLP/HTTP, prefer the per-signal env var with the full path (or exporter option). Example: OTEL_EXPORTER_OTLP_TRACES_ENDPOINT=https://ruplp.middleware.io/v1/traces (Go/HTTP exporters need the /v1/traces suffix).

- Collector (if using the Lambda Collector layer): package a config and point the extension at it:

  - Add collector.yaml to your deployment.

  - Set OPENTELEMETRY_COLLECTOR_CONFIG_URI=/var/task/collector.yaml.

  - In the file, export to Middleware via OTLP/HTTP.

- Networking/Egress: If your function runs in a VPC, ensure it has internet access (NAT Gateway / routing) so it can reach https://ruplp.middleware.io.

- Logs: Open CloudWatch Logs for the function to see wrapper/exporter/extension messages (Monitor → View CloudWatch logs).

2) “Traces appear sporadically or late”

Likely causes

- The BatchSpanProcessor is batching too long for short Lambda runs.

- The runtime is freezing before spans flush.

What to try

- Reduce the batcher delay for short invocations: OTEL_BSP_SCHEDULE_DELAY=500 (ms) (tune with BSP env vars).

- Go: wrap your handler with the Lambda wrapper and force a flush at the end: lambda.Start(otellambda.InstrumentHandler(Handle, otellambda.WithFlusher(tp))).

3) “Node.js: the wrapper runs, but my bootstrap file doesn’t load”

Likely cause

- Using NODE_OPTIONS=--require ./lambda-config.js with an ESM (module) project.

Fix

- For ESM projects, use --import / a loader instead of --require (which is for CommonJS).
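The choice between the two flags can be written down as a small rule. The helper below is a hypothetical illustration that follows this guide's convention (.js uses --require, .mjs and .ts use --import); treating .cjs as CommonJS is an added assumption, and a .js file inside a package marked as a module would still need --import.

```python
import os


def node_options_for(bootstrap_file: str) -> str:
    """Suggest a NODE_OPTIONS value for preloading the given bootstrap file."""
    name = os.path.basename(bootstrap_file)
    extension = os.path.splitext(name)[1]

    if extension in (".js", ".cjs"):
        flag = "--require"  # CommonJS preload
    elif extension in (".mjs", ".ts"):
        flag = "--import"  # ES module preload
    else:
        raise ValueError(f"unexpected bootstrap file extension: {extension!r}")

    return f"{flag} ./{name}"


if __name__ == "__main__":
    for candidate in ("lambda-config.js", "lambda-config.mjs", "lambda-config.ts"):
        print(f"NODE_OPTIONS={node_options_for(candidate)}")
```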

4) “Python: logs aren’t showing up”

Likely cause

- Auto-logging isn’t enabled for the Python OTel SDK.

Fix

- Add:

  - OTEL_PYTHON_LOGGING_AUTO_INSTRUMENTATION_ENABLED=true

  - OTEL_LOGS_EXPORTER=otlp (if exporting logs)

  These are standard OTel SDK knobs for Python logging and exporters.

5) “Using the Collector layer: I still see data going to X-Ray, not Middleware”

Likely causes

- The extension is not reading your config file, or your exporter block is missing/incorrect.

Fix

- Ensure OPENTELEMETRY_COLLECTOR_CONFIG_URI points to a file you ship (e.g., /var/task/collector.yaml).

- In that file, define an OTLP/HTTP exporter with endpoint: https://ruplp.middleware.io and a traces pipeline that uses it.

- Check the extension logs in CloudWatch to confirm it loaded your file.

6) “Java/Node/Python: wrapper set, still no traces”

Likely causes

- Wrong layer ARN (Region/architecture mismatch) or outdated layer.

- OTEL_SERVICE_NAME / mw.account_key not set.

Fix

- Re-add the correct community OTel Lambda layer ARN for your Region/arch from the latest releases (publisher 184161586896).

- Set OTEL_SERVICE_NAME and include your Middleware key via OTEL_RESOURCE_ATTRIBUTES=mw.account_key=<KEY>.

7) “Go: exporter configured, but nothing arrives”

Likely causes

- Using OTEL_EXPORTER_OTLP_ENDPOINT without a per-signal endpoint/path for HTTP.

- Missing TLS/URL settings, or not batching/flushing.

Fix

- Prefer OTEL_EXPORTER_OTLP_TRACES_ENDPOINT=https://ruplp.middleware.io/v1/traces or in code use otlptracehttp.WithEndpointURL("https://ruplp.middleware.io/v1/traces").

- Keep WithFlusher.

8) “Function times out or gets killed before export”

Likely causes

- Tight timeout/memory + long batch interval.

- Exporter retries blocked by no egress.

Fix

- Increase Lambda timeout slightly and reduce BSP delay (e.g., OTEL_BSP_SCHEDULE_DELAY=500).

- Verify VPC egress (NAT).

9) “Spans don’t link across services”

Likely cause

- Mixed propagation formats (AWS vs W3C).

Fix

- Set OTEL_PROPAGATORS=tracecontext across functions to keep W3C propagation consistent. (If you need AWS/X-Ray interop, don’t disable AWS propagation.)
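When links break, it helps to look at the header a service actually received. With tracecontext, the W3C traceparent header carries four dash-separated hex fields: version, trace ID, parent span ID, and flags. The sketch below parses the version 00 layout only; parse_traceparent is a hypothetical helper for debugging, not part of any SDK.

```python
import re

# version (2 hex) - trace-id (32 hex) - parent-id (16 hex) - flags (2 hex)
_TRACEPARENT = re.compile(
    r"^([0-9a-f]{2})-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$"
)


def parse_traceparent(header: str):
    """Return the fields of a W3C traceparent header, or None if invalid."""
    match = _TRACEPARENT.match(header.strip())
    if not match:
        return None

    version, trace_id, parent_id, flags = match.groups()
    # The spec forbids version ff and all-zero identifiers
    if version == "ff" or trace_id == "0" * 32 or parent_id == "0" * 16:
        return None

    return {
        "trace_id": trace_id,
        "parent_id": parent_id,
        "sampled": bool(int(flags, 16) & 0x01),
    }


if __name__ == "__main__":
    w3c = "00-4bf92f3577b34da6a3ce929d0e0e4736-00f067aa0ba902b7-01"
    xray = "Root=1-5759e988-bd862e3fe1be46a994272793;Parent=53995c3f42cd8ad8;Sampled=1"
    print(parse_traceparent(w3c))
    print(parse_traceparent(xray))
```

An X-Ray style value does not parse as traceparent, which is the mismatch behind spans that fail to link when one side propagates only the AWS format.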

Need assistance or want to learn more about Middleware? Contact our support team at [email protected] or join our Slack channel.