LLM Observability: Overview

What is LLM Observability?

LLM Observability in Middleware gives you end-to-end visibility into how your LLM-powered features behave in the real world. You get traces, metrics, and dashboards in one place, so you can understand behavior, troubleshoot faster, and tune for performance and cost efficiency.

What you get

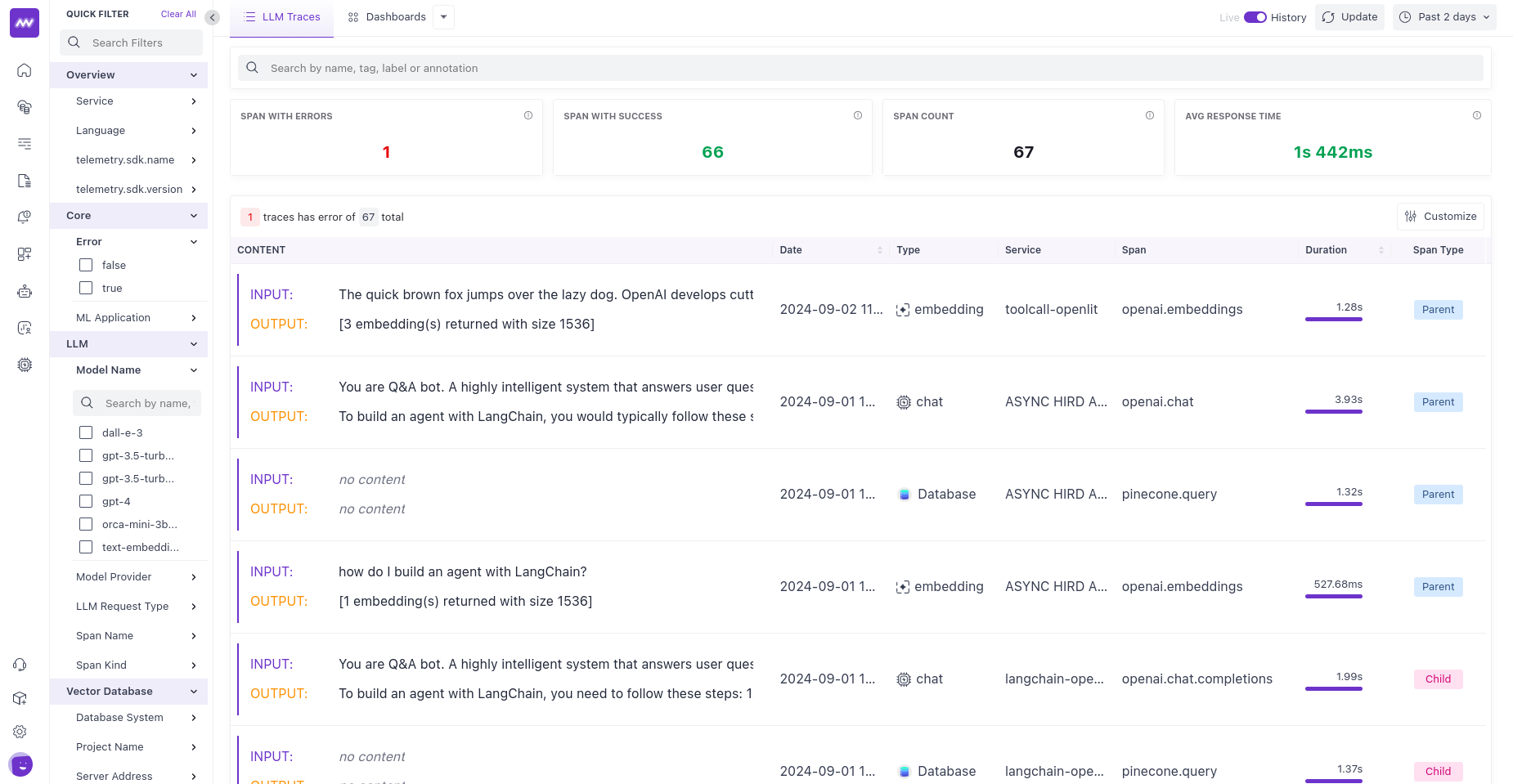

Traces

End-to-end tracing across your LLM requests and workflows, so you can see the path a request takes, where time is spent, and what’s downstream of each call.

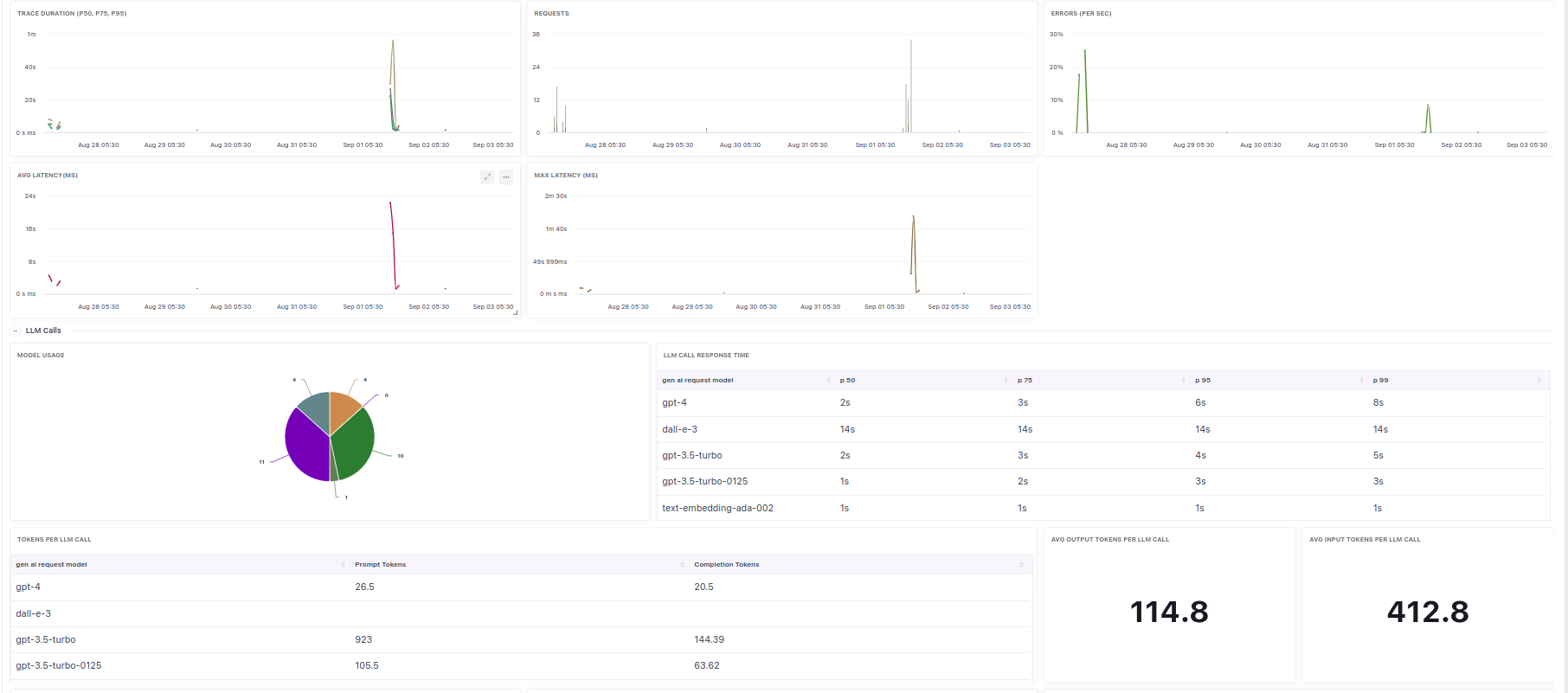

Metrics

Capture LLM-specific metrics exposed by the SDKs you integrate, enabling trend analysis and alerting on key signals for your use cases.

Dashboards

Pre-built views that surface the essentials quickly, helping you monitor health at a glance and then drill down via traces when something unusual appears.

Tip: Use dashboards for the “what” and traces for the “why.” Start with the dashboard overview, then jump into a trace to pinpoint the slow span or failing dependency.

Benefits:

- Enhanced visibility into behaviour and performance

- Faster troubleshooting with trace-level detail

- Performance optimisation guided by real usage data

- Seamless integration with popular LLM frameworks/providers via supported SDKs

These benefits are available out of the box once your app is instrumented and sending data to Middleware.

Supported SDKs

Middleware supports two OpenTelemetry-compatible SDKs for LLM Observability:

- Traceloop

- OpenLIT

Both extend OpenTelemetry to capture LLM-specific data. Choose one based on your stack and provider coverage (see comparison below).

Traceloop vs OpenLIT: Compatibility Matrix

Both Traceloop and OpenLIT support a wide range of LLM providers, vector databases, and frameworks. For the most up-to-date compatibility information:

- Traceloop: See Traceloop supported integrations

- OpenLIT: See OpenLIT supported integrations

These pages are maintained by the SDK authors and reflect the latest provider and framework support.

Quick Start

- Pick an SDK: Choose Traceloop or OpenLIT based on the matrix above and your stack.

- Instrument your application: Follow the SDK-specific guide to install, initialise, and configure the client to send data to your Middleware instance. (Examples and parameters are shown on each SDK page.)

- Send data to Middleware: Configure the SDK to point to your Middleware endpoint and include the required authentication headers, as shown in the guide.

- Verify in the UI: Open the LLM Observability section and confirm that traces and metrics are visible on the dashboards. Drill into traces to verify that spans and attributes appear correct.
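As a rough illustration of the instrument-and-send steps above, the sketch below reads an endpoint and key from the environment and passes them to Traceloop's `Traceloop.init`. The environment variable names `MW_ENDPOINT` and `MW_API_KEY`, the `Authorization` header name, and the app name are assumptions made for this example. They are not values confirmed by this page, so take the real ones from the SDK-specific guide.

```python
import os


def middleware_otlp_config(env=None):
    """Collect the endpoint and auth headers an OpenTelemetry-compatible SDK needs.

    MW_ENDPOINT and MW_API_KEY are hypothetical variable names used for
    illustration; substitute whatever your SDK guide specifies.
    """
    env = os.environ if env is None else env
    endpoint = env.get("MW_ENDPOINT", "").strip()
    api_key = env.get("MW_API_KEY", "").strip()
    if not endpoint or not api_key:
        raise ValueError("Set MW_ENDPOINT and MW_API_KEY before starting the app")
    # Assumption: the key travels in an Authorization header; check the SDK guide.
    return {"endpoint": endpoint, "headers": {"Authorization": api_key}}


def init_llm_observability(config, app_name="my-llm-app"):
    """Initialise Traceloop if it is installed; return True when it was started."""
    try:
        from traceloop.sdk import Traceloop  # pip install traceloop-sdk
    except ImportError:
        return False
    Traceloop.init(
        app_name=app_name,
        api_endpoint=config["endpoint"],
        headers=config["headers"],
    )
    return True
```

Call `init_llm_observability(middleware_otlp_config())` once at application start-up, before the first LLM call. If you choose OpenLIT instead, its `openlit.init` accepts comparable endpoint and header arguments; consult the OpenLIT guide for the exact names.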

Choosing the right SDK (rules of thumb)

- Need Gemini, Watsonx, Together, or Aleph Alpha: Prefer Traceloop.

- Need GPT4All, Groq, or ElevenLabs: Prefer OpenLIT.

- Using mainstream providers (OpenAI, Azure OpenAI, Anthropic, Cohere, Bedrock, Mistral, Vertex, Hugging Face) and common vector DBs: Either SDK works; choose based on team familiarity.
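The rules of thumb above can be written down as a small helper, which is handy if you want to check a list of providers in a script. This is only a sketch: the function name `suggest_sdk` is hypothetical, and the provider sets simply mirror the bullets above rather than the SDKs' current support lists, which may change.

```python
# Provider names taken from the rules of thumb above (lower-cased for matching).
PREFER_TRACELOOP = {"gemini", "watsonx", "together", "aleph alpha"}
PREFER_OPENLIT = {"gpt4all", "groq", "elevenlabs"}


def suggest_sdk(providers):
    """Return 'Traceloop', 'OpenLIT', 'either', or 'conflict' for a provider list."""
    names = {p.strip().lower() for p in providers}
    needs_traceloop = bool(names & PREFER_TRACELOOP)
    needs_openlit = bool(names & PREFER_OPENLIT)
    if needs_traceloop and needs_openlit:
        # The rules point in both directions; check each SDK's integration page.
        return "conflict"
    if needs_traceloop:
        return "Traceloop"
    if needs_openlit:
        return "OpenLIT"
    return "either"
```

For example, `suggest_sdk(["OpenAI", "Gemini"])` returns `"Traceloop"`, while a list containing only mainstream providers returns `"either"`.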

Common Pitfalls (and how to avoid them)

- No data in the UI: Double-check that the SDK is initialised with the correct api_endpoint and authentication headers, and that your app actually triggers LLM calls in an environment that can reach Middleware.

- Partial traces: If you’re using a supported framework, ensure auto-instrumentation is enabled; otherwise, add the minimal annotations recommended by the SDK guide.

- Mismatched provider: Verify your chosen SDK supports your exact provider/database before rollout (see matrix).
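For the "no data in the UI" case, a quick preflight check can rule out the most common configuration mistakes before you dig into the SDK. The sketch below uses only the Python standard library; the function names are hypothetical, and it checks just the URL shape, the presence of a non-empty header, and basic TCP reachability, not whether Middleware accepts your credentials.

```python
import socket
from urllib.parse import urlsplit


def config_problems(endpoint, headers):
    """Return a list of human-readable problems with the endpoint/header config."""
    problems = []
    parts = urlsplit(endpoint)
    if parts.scheme not in ("http", "https") or not parts.hostname:
        problems.append("endpoint must be a full http(s) URL")
    if not any(value.strip() for value in headers.values()):
        problems.append("no non-empty authentication header set")
    return problems


def can_reach(endpoint, timeout=3.0):
    """Return True if a TCP connection to the endpoint's host and port succeeds."""
    parts = urlsplit(endpoint)
    if not parts.hostname:
        return False
    port = parts.port or (443 if parts.scheme == "https" else 80)
    try:
        with socket.create_connection((parts.hostname, port), timeout=timeout):
            return True
    except OSError:
        return False
```

Run both checks from the same environment as your application (the same container, VM, or network), since reachability from a laptop says little about reachability from production.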

Need assistance or want to learn more about Middleware? Contact our support team at [email protected] or join our Slack channel.