Kubernetes Auto-instrumentation

Automated instrumentation lets you collect end‑to‑end traces from your services without modifying application code. Middleware.io’s auto‑instrumentation is built on the OpenTelemetry Kubernetes Operator, combined with a language‑detection webhook and mutating injector, to deliver in‑cluster, zero‑downtime instrumentation for Java, .NET, Node.js, Python, and Go workloads.

Why this matters

- No code changes – Deploy once, get traces immediately.

- Centralized control – Toggle instrumentation per namespace or app via UI or CR.

- Dynamic updates – Configuration changes take effect as soon as you restart the affected pods; no rebuild or redeploy is needed.

Prerequisites

1. Kubernetes 1.21+

kubectl version

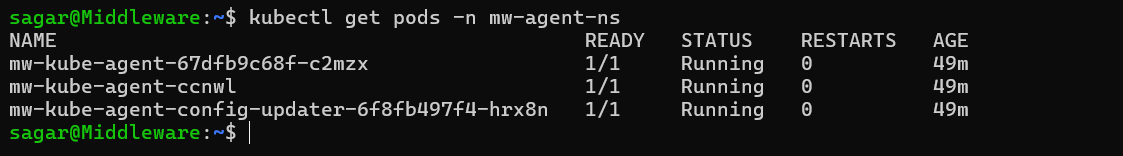

2. Middleware Kubernetes Agent installed and running in mw-agent-ns.

kubectl get pods -n mw-agent-ns

3. Helm 3.5+ (for the Helm method) or bash + curl (for the script method).

4. cert‑manager v1.14+ (unless you use an alternate certificate issuer).

5. Middleware API Key with APM permissions.

Installation Methods

Auto‑Instrumentation can be installed either via Helm or a single‑command script. Both approaches deploy the OpenTelemetry Operator, Language Detector, Mutating Webhook, and a default Instrumentation CR named mw-autoinstrumentation in the mw-agent-ns namespace.

Install cert‑manager (if not already present):

helm repo add jetstack https://charts.jetstack.io
helm repo update
helm install cert-manager jetstack/cert-manager \
  --namespace cert-manager --create-namespace \
  --version v1.14.5 --set installCRDs=true

Add the Middleware Helm repo and install the auto‑instrumentation chart:

helm repo add middleware-labs https://helm.middleware.io

helm repo update

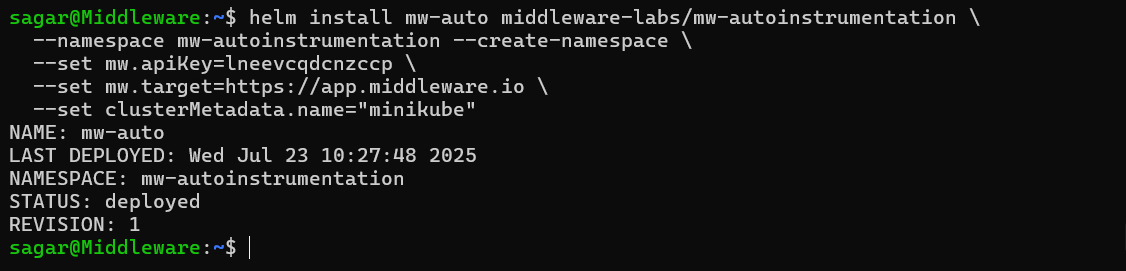

helm install mw-auto middleware-labs/mw-autoinstrumentation \
  --namespace mw-autoinstrumentation --create-namespace \
  --set mw.apiKey=<MW_API_KEY> \
  --set mw.target=https://app.middleware.io \
  --set clusterMetadata.name=<YOUR_CLUSTER_NAME>

These commands deploy the OpenTelemetry Operator, Language Detector, Webhook, and default Instrumentation CR.
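If you prefer to keep these settings in a file rather than repeat --set flags, the same three keys can be written as a Helm values file. This is a minimal sketch: the file name values.yaml is an arbitrary choice, and only the keys already used in the command above are shown, since other chart options are not covered here.

```yaml
# values.yaml (hypothetical file name): mirrors the --set flags used above
mw:
  apiKey: "<MW_API_KEY>"              # Middleware API key with APM permissions
  target: "https://app.middleware.io"
clusterMetadata:
  name: "<YOUR_CLUSTER_NAME>"
```

Pass the file to the same install command with Helm's -f flag (for example, -f values.yaml) in place of the three --set flags.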

For a one‑liner approach, run:

MW_INSTALL_CERT_MANAGER=true \
MW_API_KEY="<YOUR_MW_API_KEY>" \
MW_TARGET="https://app.middleware.io" \
MW_CLUSTER_NAME="prod-cluster" \
bash -c "$(curl -L https://install.middleware.io/scripts/mw-kube-auto-instrumentation-install.sh)"

Control namespace scope by adding environment variables to the install command:

# Only instrument the 'app' and 'backend' namespaces
MW_INCLUDED_NAMESPACES="app,backend" \

# Instrument all namespaces except 'monitoring' and 'infra'
MW_EXCLUDED_NAMESPACES="monitoring,infra" \

Configuring Auto‑Instrumentation

UI‑Driven Application Selection

- Navigate to Kubernetes Agent ➔ Auto‑Instrumentation in the Middleware UI.

- Review the list of detected Deployments, StatefulSets, and DaemonSets, along with their inferred language and current status.

- Toggle instrumentation per‐workload or click Select All and then Save Changes.

- Restart your applications for changes to apply:

kubectl rollout restart deployment/<deployment-name> -n <namespace>

Manual Custom Configuration

When to Use Manual Configuration

Use manual configuration in these scenarios:

- Language detection isn’t working for your application.

- You need custom instrumentation settings, such as non‑default sampling or exporter options.

- Your application has special requirements, like custom resource attributes or propagators.

- You want fine‑grained control over which pods get instrumented and how.

Before you begin: Disable auto‑instrumentation for the target application via the UI to avoid conflicts.

In order to auto‑instrument your applications, the OTel Kubernetes Operator must know which Pods to instrument and which Instrumentation Custom Resource (CR) to apply to those Pods. The installation steps in the Installation section automatically create a default Instrumentation CR named mw-autoinstrumentation in the mw-agent-ns namespace.

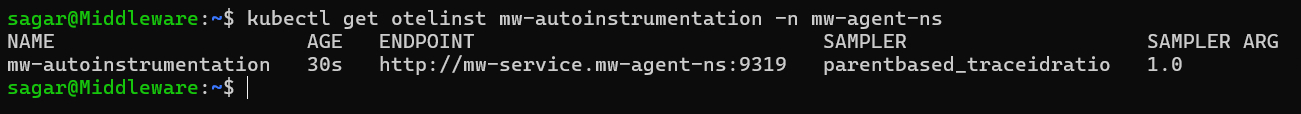

To confirm that the default Instrumentation CR is present, run:

kubectl get otelinst mw-autoinstrumentation -n mw-agent-ns

To inspect the full instrumentation CR, use kubectl get with the -o yaml flag in the namespace where it lives. For example, if it’s in mw-autoinstrumentation:

kubectl get otelinst mw-autoinstrumentation -n mw-autoinstrumentation -o yaml

The Instrumentation CR looks like this:

apiVersion: opentelemetry.io/v1alpha1
kind: Instrumentation
metadata:
  name: mw-autoinstrumentation
  namespace: mw-agent-ns
spec:
  exporter:
    endpoint: http://mw-service.mw-agent-ns:9319
  propagators:
    - tracecontext
    - baggage
    - b3
  sampler:
    type: parentbased_traceidratio
    argument: "1.0"
  python:
    env:
      - name: OTEL_EXPORTER_OTLP_ENDPOINT
        value: http://mw-service.mw-agent-ns:9320
      - name: OTEL_LOGS_EXPORTER
        value: otlp_proto_http
      - name: OTEL_PYTHON_LOGGING_AUTO_INSTRUMENTATION_ENABLED
        value: "true"
  go:
    env:
      - name: OTEL_EXPORTER_OTLP_ENDPOINT
        value: http://mw-service.mw-agent-ns:9320
  dotnet:
    env:
      - name: OTEL_EXPORTER_OTLP_ENDPOINT
        value: http://mw-service.mw-agent-ns:9320
  java:
    env:
      - name: OTEL_EXPORTER_OTLP_ENDPOINT
        value: http://mw-service.mw-agent-ns:9319

The exporter.endpoint field in your Instrumentation CR tells your instrumented pods where to send traces. It should point to the Middleware Kubernetes Agent service, using:

- Port 9319 for gRPC (OTLP/gRPC)

- Port 9320 for HTTP (OTLP/HTTP)

Where to put your annotations

To enable auto‑instrumentation, add language‑specific annotations inside each Pod template, under:

spec:
  template:
    metadata:
      annotations:
        <your-injection-annotation>: "<namespace>/<cr-name>"

Do not place these annotations in the top‑level metadata: of your Deployment, DaemonSet, or StatefulSet; they belong only in the Pod template.
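To make the placement concrete, here is a minimal sketch of a complete Deployment with a Java injection annotation in the Pod template. The workload name, namespace, labels, and image are hypothetical, and the annotation value must match the namespace and name of the Instrumentation CR as it actually exists in your cluster.

```yaml
# Sketch only: workload name, namespace, labels, and image are hypothetical.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: orders-api
  namespace: app
  # Do not put injection annotations here (top-level metadata).
spec:
  replicas: 1
  selector:
    matchLabels:
      app: orders-api
  template:
    metadata:
      labels:
        app: orders-api
      annotations:
        # Injection annotations go here, in the Pod template metadata.
        instrumentation.opentelemetry.io/inject-java: "mw-autoinstrumentation/mw-autoinstrumentation"
    spec:
      containers:
        - name: orders-api
          image: registry.example.com/orders-api:1.0.0   # hypothetical image
```

After applying a manifest like this, the Pods are recreated from the updated template, so the annotation takes effect without a separate restart.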

Supported Languages & Annotations

Add these annotations under spec.template.metadata.annotations of your Pod template:

Java

instrumentation.opentelemetry.io/inject-java: "mw-autoinstrumentation/mw-autoinstrumentation"

Node.js

instrumentation.opentelemetry.io/inject-nodejs: "mw-autoinstrumentation/mw-autoinstrumentation"

Python

instrumentation.opentelemetry.io/inject-python: "mw-autoinstrumentation/mw-autoinstrumentation"

.NET (glibc)

instrumentation.opentelemetry.io/inject-dotnet: "mw-autoinstrumentation/mw-autoinstrumentation"
instrumentation.opentelemetry.io/otel-dotnet-auto-runtime: "linux-x64"

.NET (musl)

Use this runtime value together with the inject-dotnet annotation shown for glibc:

instrumentation.opentelemetry.io/otel-dotnet-auto-runtime: "linux-musl-x64"

Go

To auto‑instrument a Go application, you must tell the injector which executable to attach to and grant it the necessary permissions. Use the exact annotations and securityContext shown below.

- Pod Annotations: Add these under spec.template.metadata.annotations (replace the values as needed):

instrumentation.opentelemetry.io/inject-go: "mw-agent-ns/mw-autoinstrumentation"
instrumentation.opentelemetry.io/otel-go-auto-target-exe: "/path/to/executable"

- Container Security Context: Within the same Pod spec, ensure your container has elevated privileges so the auto‑instrumentation helper can attach via ptrace:

securityContext:
  privileged: true
  runAsUser: 0

How it works: The Go auto‑instrumentation deploys a helper binary alongside your main process. It uses low‑level system calls to hook into your running executable (specified by otel-go-auto-target-exe). Running as root with privileged: true is required for these calls to succeed. Once applied and your Pod restarts, traces from your Go application will flow into Middleware.io automatically.
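Put together, the Go annotations and security context might sit in a Deployment as sketched below. The workload name, labels, image, and executable path are hypothetical; only the annotation keys and the securityContext fields come from the steps above.

```yaml
# Sketch only: names, image, and executable path are hypothetical.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: payments-go
spec:
  selector:
    matchLabels:
      app: payments-go
  template:
    metadata:
      labels:
        app: payments-go
      annotations:
        instrumentation.opentelemetry.io/inject-go: "mw-agent-ns/mw-autoinstrumentation"
        # Path of the Go binary inside the container image.
        instrumentation.opentelemetry.io/otel-go-auto-target-exe: "/app/payments"
    spec:
      containers:
        - name: payments-go
          image: registry.example.com/payments-go:1.0.0
          securityContext:
            privileged: true   # lets the helper attach to the running process
            runAsUser: 0       # run as root
```

Because privileged containers running as root carry a broad security footprint, it may be worth limiting this configuration to the workloads that actually need Go tracing.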

Resource Attributes

If you want to add resource attributes to the traces generated by auto‑instrumentation, add annotations in the following format to your Pod specification (under spec.template.metadata.annotations):

resource.opentelemetry.io/your-key: "your-value"

- your-key and "your-value" are the resource attribute’s key and value.

- You can add multiple attributes by listing multiple resource.opentelemetry.io/<key>: "<value>" annotations.
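As an illustration, a Pod template carrying two resource attributes next to an injection annotation could look like the fragment below. The attribute keys and values (deployment.environment, team) are illustrative choices, not required names.

```yaml
# Fragment of a workload manifest; attribute keys and values are illustrative.
spec:
  template:
    metadata:
      annotations:
        instrumentation.opentelemetry.io/inject-python: "mw-autoinstrumentation/mw-autoinstrumentation"
        # Each annotation below adds one resource attribute to the emitted traces.
        resource.opentelemetry.io/deployment.environment: "staging"
        resource.opentelemetry.io/team: "checkout"
```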

Advanced Configuration

By default, all language‑injection annotations use the value mw-agent-ns/mw-autoinstrumentation, because the OTel Kubernetes Operator (installed via Middleware’s instructions) creates an Instrumentation CR named mw-autoinstrumentation in the mw-agent-ns namespace.

You can also create your own Instrumentation CR in any namespace and reference it in your annotations. Below are all valid values for the injection annotation (replace <lang-annotation> with the appropriate key, e.g. instrumentation.opentelemetry.io/inject-java):

# 1. Use the one CR in the Pod's namespace

<lang-annotation>: "true"

# 2. Use the CR named "my-instrumentation" in the current namespace

<lang-annotation>: "my-instrumentation"

# 3. Use the CR named "my-instrumentation" from namespace "my-other-namespace"

<lang-annotation>: "my-other-namespace/my-instrumentation"

# 4. Disable instrumentation for this Pod

<lang-annotation>: "false"

The possible values behave as follows:

- "true": Inject the single Instrumentation CR present in the Pod’s namespace. Behavior is unpredictable if multiple Instrumentation CRs are defined in that namespace.

- "my-instrumentation": Inject the CR named my-instrumentation from the Pod’s namespace.

- "my-other-namespace/my-instrumentation": Inject the CR named my-instrumentation from my-other-namespace. This is the form used in the examples above, which reference the mw-autoinstrumentation CR.

- "false": Do not inject any CR (use this to temporarily disable instrumentation).

When using Pod‑based workloads (Deployments, StatefulSets, DaemonSets), make sure these annotations appear under the Pod template section (spec.template.metadata.annotations), not under the workload’s top‑level metadata.annotations.
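A custom Instrumentation CR could look like the sketch below. The name, namespace, and 25% sampling ratio are hypothetical values chosen for illustration; the fields themselves follow the default CR shown earlier. The language-specific env overrides from the default CR are omitted here and may need to be copied over for languages that export over OTLP/HTTP.

```yaml
# Hypothetical custom CR: name, namespace, and sampling ratio are illustrative.
apiVersion: opentelemetry.io/v1alpha1
kind: Instrumentation
metadata:
  name: my-instrumentation
  namespace: my-other-namespace
spec:
  exporter:
    endpoint: http://mw-service.mw-agent-ns:9319   # Middleware Agent, OTLP/gRPC
  propagators:
    - tracecontext
    - baggage
  sampler:
    type: parentbased_traceidratio
    argument: "0.25"   # sample roughly 25% of new traces
```

A Pod in any namespace would then reference it with the cross-namespace form, for example instrumentation.opentelemetry.io/inject-java: "my-other-namespace/my-instrumentation".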

Explore Your Data on Middleware

Once you’ve installed auto‑instrumentation and restarted your Pods, new trace data will flow into Middleware automatically as your applications handle requests. To view and analyze this data:

- Log in to your Middleware.io account.

- Navigate to APM in the sidebar.

- Select your service from the list (e.g., test-node-app).

- Explore Service Maps, Trace Details, Latency Charts, and Error Rates for real‑time insights into your application’s performance.

Uninstall

You have two options for removing auto‑instrumentation from your cluster.

1. Uninstall both cert‑manager and Middleware Auto‑Instrumentation

This will remove the mw-auto release (OTel Operator, Language Detector, Webhook, etc.) and the cert‑manager chart.

helm uninstall mw-auto --namespace mw-autoinstrumentation

helm uninstall cert-manager --namespace cert-manager

2. Uninstall only Middleware Auto‑Instrumentation

If you want to keep cert‑manager in place (for other webhooks), remove just the mw-auto release:

helm uninstall mw-auto --namespace mw-autoinstrumentation

Troubleshooting

Google Kubernetes Engine (Private Cluster)

In a GKE private cluster, the control plane cannot reach your worker nodes on port 9443 by default. To fix this, allow the control‑plane CIDR range access:

Find the control‑plane CIDR block

gcloud container clusters describe <CLUSTER_NAME> \
  --region <REGION> \
  --format="value(privateClusterConfig.masterIpv4CidrBlock)"

Example (for a zonal cluster, pass --zone instead of --region):

gcloud container clusters describe demo-cluster --zone us-central1-c \
  --format="value(privateClusterConfig.masterIpv4CidrBlock)"

Output:

172.16.0.0/28

Then add a firewall rule that allows ingress from this IP range on TCP port 9443:

gcloud compute firewall-rules create cert-manager-9443 \
  --source-ranges <GKE_CONTROL_PLANE_CIDR> \
  --target-tags <GKE_CONTROL_PLANE_TAG> \
  --allow TCP:9443

For more details, see the GKE firewall documentation.

Applications Not Detected

- Verify your application uses a supported language (Java, Node.js, Python, .NET, Go).

- For multi‑container Pods, ensure your main app container is listed first under spec.containers.

- Check that your application starts correctly and remains in the Running state.

- Inspect the Language Detector logs in the mw-autoinstrumentation namespace for errors:

kubectl logs deploy/language-detector -n mw-autoinstrumentation

- If detection still fails, restart the detector:

kubectl rollout restart deployment/language-detector -n mw-autoinstrumentation

Instrumentation Not Working

- Confirm the namespace is included in your auto‑instrumentation scope.

- Ensure your application Pods were restarted after enabling instrumentation.

- Check your annotations under spec.template.metadata.annotations for typos.

- Review admission webhook errors and component logs in mw-autoinstrumentation:

kubectl logs deploy/auto-injector-webhook -n mw-autoinstrumentation

- Verify network connectivity from your Pods to the Middleware Agent service (mw-service.mw-agent-ns:9319 or 9320).

- Inspect the OpenTelemetry Operator logs for reconciliation issues:

kubectl logs deploy/otel-operator -n mw-autoinstrumentation

If problems persist after these checks, please reach out to Middleware support with your logs and configuration details.