Log Ingestion Control (Hosts & Kubernetes Clusters)

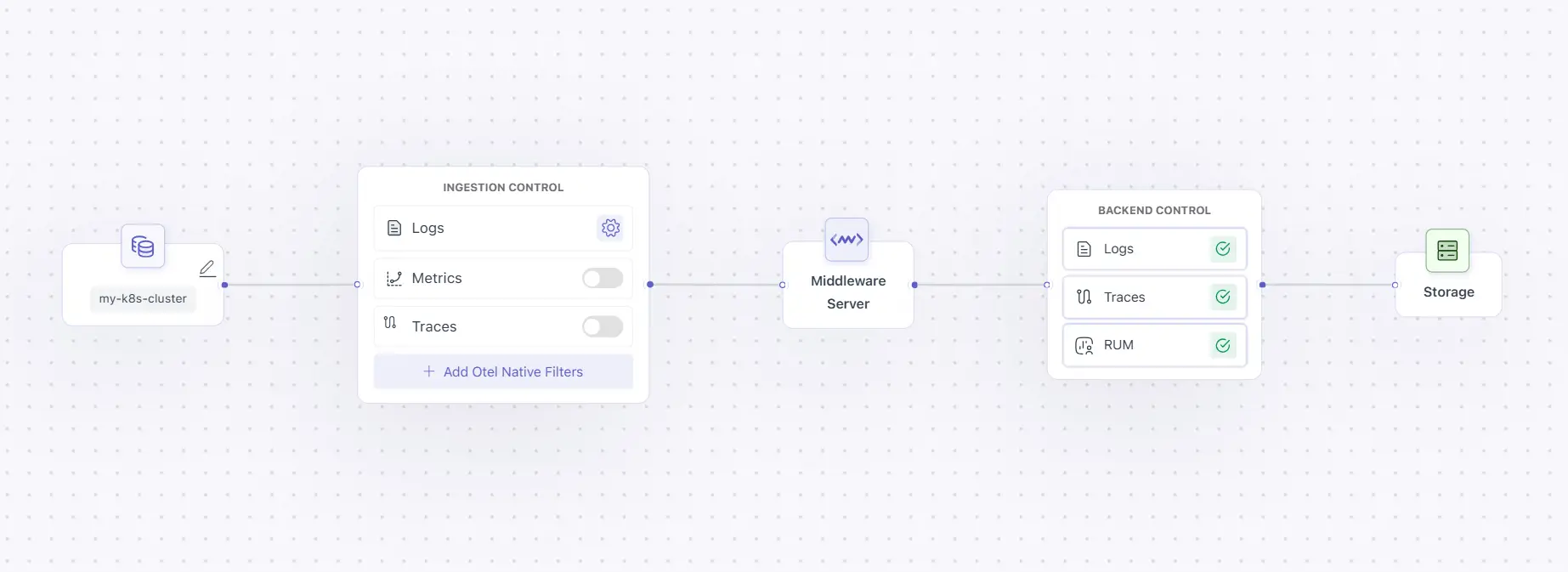

Log Ingestion Control defines how file-based logs are collected, parsed, enriched, and secured before they are sent to Middleware.

Think of this layer as the quality gate for logs: it decides what to read, how to structure it, and what to protect before data moves further in the pipeline.

These controls run at the agent / OpenTelemetry Collector level, so they help reduce noise, storage load, network usage, and compliance risk early in the flow.

The same core capabilities are available for:

- Hosts

- Kubernetes Clusters

Kubernetes also adds cluster-aware selection controls such as namespace, deployment, and pod filters.

Infra Log Monitoring

Infra Log Monitoring controls whether file-based logs are collected at all. This is the first decision point in ingestion: if it is disabled, none of the log path, label, or parsing settings take effect.

What happens when it is OFF

When this toggle is disabled:

- No file-based logs are scraped

- Log paths, labels, regex parsing, and multiline parsing are all disabled

- The agent does not read any log files from disk

Use this mode when:

- You only want metrics and traces

- You are relying on integration-based data instead of file logs

What happens when it is ON

When enabled, full log collection configuration becomes available:

| Feature | Purpose |
|---|---|
| Log Paths | Defines which files should be read |
| Labels | Adds searchable metadata to logs |
| Multiline Parsing | Combines multi-line entries into one event |

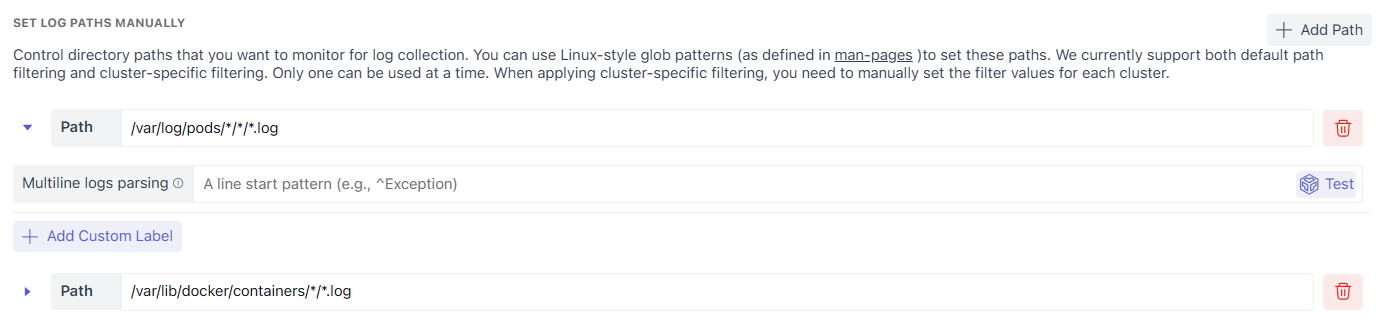
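Multiline parsing is easiest to picture as a line-start rule. A line that looks like the beginning of a new entry opens a new event, and every other line is appended to the previous event. The Python sketch below assumes entries start with an ISO-style date (`YYYY-MM-DD`). The `LINE_START` pattern and the `combine_multiline` helper are hypothetical illustrations, not Middleware's configuration syntax.

```python
import re

# Hypothetical start-of-entry rule: a new event begins with an ISO-style date.
LINE_START = re.compile(r"^\d{4}-\d{2}-\d{2}")


def combine_multiline(lines: list[str]) -> list[str]:
    """Join continuation lines (e.g. stack-trace frames) onto the previous event."""
    events: list[str] = []
    for line in lines:
        if LINE_START.match(line) or not events:
            # Start of a new entry, or a stray first line with no event yet.
            events.append(line)
        else:
            # Continuation line: append it to the event currently being built.
            events[-1] += "\n" + line
    return events


raw = [
    "2024-12-18 05:27:45 ERROR request failed",
    "Traceback (most recent call last):",
    '  File "app.py", line 3, in <module>',
    "ValueError: bad input",
    "2024-12-18 05:27:46 INFO request ok",
]
events = combine_multiline(raw)
print(len(events))  # 2
```

A start pattern that is too loose splits a stack trace into several events, and one that is too strict merges unrelated entries. Testing the pattern against real log samples before enabling it is worthwhile.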

Log Path Configuration

Log path configuration depends on where the agent is running.

The goal is to ensure you collect only relevant logs and avoid broad patterns that bring in unnecessary noise.

| Environment | How logs are selected |
|---|---|
| Hosts | Direct file paths (default path model) |
| Kubernetes Clusters | Default paths, or Namespace / Pod / Deployment filters |

A. Log Paths for Hosts

On host-based agents, logs are collected directly from disk paths. You define Linux-style glob patterns to control exactly which files are ingested.

Example paths

- `/var/log/**/*.log`

- `/home/app/logs/*.log`

- `/var/lib/docker/containers/*/*.log`

These patterns directly determine your ingestion scope, so tighter paths usually produce cleaner and lower-cost results.
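You can check which files a set of patterns would pick up by translating each glob into a regular expression. The sketch below assumes common "doublestar" semantics: `**/` matches zero or more directories, `*` matches within a single path segment, and `?` matches one character other than `/`. The agent's own matcher may differ in edge cases. `glob_to_regex` and `is_collected` are hypothetical helpers.

```python
import re

# The example patterns from above.
PATTERNS = [
    "/var/log/**/*.log",
    "/home/app/logs/*.log",
    "/var/lib/docker/containers/*/*.log",
]


def glob_to_regex(pattern: str) -> re.Pattern:
    """Translate a glob into an anchored regex (assumed doublestar semantics)."""
    out, i = [], 0
    while i < len(pattern):
        if pattern.startswith("**/", i):
            out.append("(?:[^/]+/)*")  # zero or more whole directories
            i += 3
        elif pattern.startswith("**", i):
            out.append(".*")  # trailing ** matches anything, including '/'
            i += 2
        elif pattern[i] == "*":
            out.append("[^/]*")  # any run of characters inside one segment
            i += 1
        elif pattern[i] == "?":
            out.append("[^/]")  # exactly one non-separator character
            i += 1
        else:
            out.append(re.escape(pattern[i]))
            i += 1
    return re.compile("".join(out) + r"\Z")


COMPILED = [glob_to_regex(p) for p in PATTERNS]


def is_collected(path: str) -> bool:
    """True if any configured pattern matches the full path."""
    return any(rx.match(path) for rx in COMPILED)


for path in [
    "/var/log/syslog.log",             # True
    "/var/log/nginx/access.log",       # True
    "/var/log/nginx/access.log.1",     # False: rotated file, no .log suffix
    "/home/app/logs/archive/old.log",  # False: '*' does not cross '/'
]:
    print(path, is_collected(path))
```

Running a check like this before deploying a broad pattern such as `/var/log/**/*.log` shows how much that pattern actually pulls in.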

Per-path configuration:

Each path can have independent settings, which is useful when different applications write logs in different formats.

| Feature | Purpose |
|---|---|
| Multiline Parsing | Joins stack traces and split log lines |
| Path Labels | Adds static context for that specific path |

Example:

| Path | Labels |
|---|---|
| `/var/log/nginx/*.log` | service=nginx |
| `/var/log/mysql/*.log` | service=mysql |
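Per-path labels amount to a lookup from the file a line came from to a fixed set of labels. The sketch below mirrors the example table. The `PATH_LABELS` mapping and the `labels_for` and `enrich` helpers are hypothetical, and the record shape is an assumption rather than Middleware's internal format. It uses `pathlib.PurePosixPath.match`, which matches an absolute pattern against the whole path segment by segment, so `*` never crosses a `/`.

```python
from pathlib import PurePosixPath

# Hypothetical per-path label rules mirroring the example table.
PATH_LABELS = {
    "/var/log/nginx/*.log": {"service": "nginx"},
    "/var/log/mysql/*.log": {"service": "mysql"},
}


def labels_for(path: str) -> dict[str, str]:
    """Collect the static labels of every pattern that matches this file."""
    labels: dict[str, str] = {}
    for pattern, extra in PATH_LABELS.items():
        # An absolute pattern must match the whole path, segment by segment.
        if PurePosixPath(path).match(pattern):
            labels.update(extra)
    return labels


def enrich(path: str, body: str) -> dict:
    """Attach path labels to a single log line read from `path`."""
    return {"body": body, "labels": labels_for(path)}


print(enrich("/var/log/nginx/access.log", "GET /health 200"))
# {'body': 'GET /health 200', 'labels': {'service': 'nginx'}}
```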

B. Log Selection for Kubernetes Clusters

Kubernetes supports both broad and fine-grained selection approaches.

Default Path Configuration

Applies one shared path configuration to all selected clusters.

Use this when:

- Clusters share a common logging structure

- You want standard behaviour across environments

Cluster-Specific Configuration

Lets you override settings for each cluster individually:

- Paths

- Labels

- Multiline parsing

Use this when:

- Clusters differ by team or workload type

- Logging layouts vary between environments
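You can think of the two modes as a default configuration plus optional per-cluster overrides. The sketch below assumes overrides apply key by key: a cluster-specific value replaces the default for that key, and every other key is inherited. The cluster names, settings, and `effective_config` helper are hypothetical. The product may apply overrides differently, for example by replacing the whole configuration for a cluster.

```python
# Hypothetical shared defaults applied to every selected cluster.
DEFAULTS = {
    "paths": ["/var/log/pods/*/*.log"],
    "labels": {"env": "production"},
    "multiline": None,
}

# Hypothetical per-cluster overrides; keys not listed fall back to DEFAULTS.
OVERRIDES = {
    "payments-eu": {
        "labels": {"env": "production", "team": "payments"},
        "multiline": r"^\d{4}-\d{2}-\d{2}",
    },
}


def effective_config(cluster: str) -> dict:
    """Return the settings one cluster would run with under key-by-key override."""
    config = dict(DEFAULTS)  # shallow copy so DEFAULTS itself is never mutated
    config.update(OVERRIDES.get(cluster, {}))
    return config


print(effective_config("payments-eu")["paths"])  # ['/var/log/pods/*/*.log']
```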

Two collection methods in Cluster-Specific mode

Option 1: Filter via Path

Path-based selection, similar to host mode.

Example:

/var/log/pods/*/*.log

Option 2: Filter via Namespace, Deployments, and Pods

Kubernetes-native selectors for more precise targeting.

For each cluster, you can:

- Include or exclude namespaces

- Include or exclude deployments

- Include or exclude pods

Examples:

- Exclude the `kube-system` namespace

- Include only the `payments` deployment

- Exclude noisy debug pods

This method is especially useful when pod-level control is needed without maintaining many path rules.
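The include/exclude decision for a single pod can be sketched as three independent rules that must all pass. This sketch makes several assumptions. Exclusions always win over inclusions. An empty include list means "allow all". Names may use glob-style wildcards (via `fnmatch.fnmatchcase`). The pod's owning deployment is already known (in Kubernetes, a Deployment-managed pod is owned by a ReplicaSet, which is in turn owned by the Deployment). `Rule` and `ClusterSelector` are hypothetical types, not Middleware's API.

```python
from dataclasses import dataclass, field
from fnmatch import fnmatchcase
from typing import Optional


def _matches(value: Optional[str], patterns: set[str]) -> bool:
    """True if the name matches any glob-style pattern (None never matches)."""
    return value is not None and any(fnmatchcase(value, p) for p in patterns)


@dataclass
class Rule:
    include: set[str] = field(default_factory=set)  # empty set means "allow all"
    exclude: set[str] = field(default_factory=set)  # exclusions always win

    def allows(self, value: Optional[str]) -> bool:
        if _matches(value, self.exclude):
            return False
        return not self.include or _matches(value, self.include)


@dataclass
class ClusterSelector:
    namespaces: Rule = field(default_factory=Rule)
    deployments: Rule = field(default_factory=Rule)
    pods: Rule = field(default_factory=Rule)

    def should_collect(self, namespace: str, deployment: Optional[str], pod: str) -> bool:
        """A pod's logs are collected only if every rule allows it."""
        return (
            self.namespaces.allows(namespace)
            and self.deployments.allows(deployment)
            and self.pods.allows(pod)
        )


# The three examples above, expressed with this sketch.
selector = ClusterSelector(
    namespaces=Rule(exclude={"kube-system"}),
    deployments=Rule(include={"payments"}),
    pods=Rule(exclude={"debug-*"}),
)
print(selector.should_collect("shop", "payments", "payments-7d9f8-x2k4q"))  # True
print(selector.should_collect("kube-system", "coredns", "coredns-5d78c-abcde"))  # False
```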

2. Scrape Attributes from Log Body (Regex Parsing)

Regex parsing extracts fields from raw log body text and converts them into structured attributes.

This is important when logs are plain text but teams still need searchable fields in the UI.

Example log:

`[2024-12-18T05:27:45.361245] dev test`

Regex:

`\[(?P<date>.+)\] (?P<log_message>.+)`

Result:

`{"date": "2024-12-18T05:27:45.361245", "log_message": "dev test"}`

You can then filter directly by:

- date

- log_message

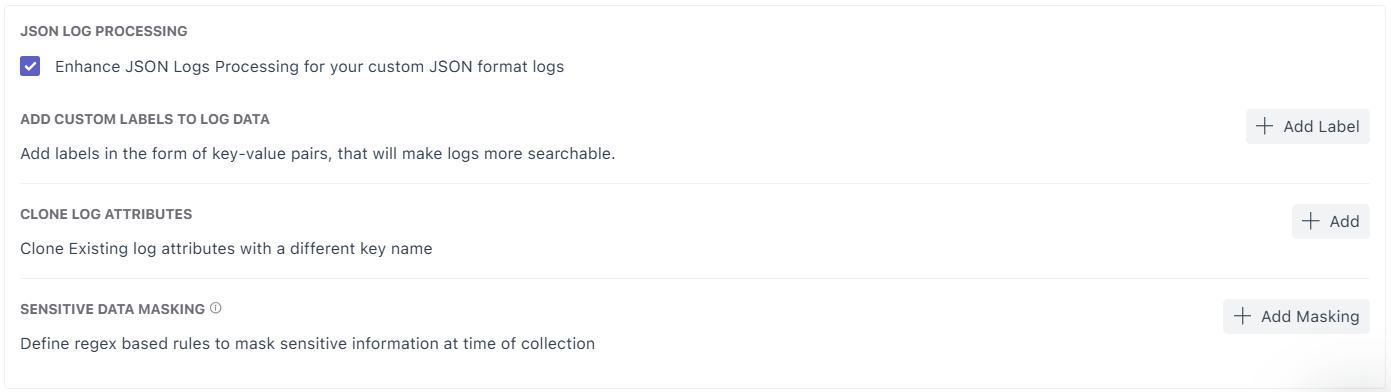
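Python's `re` module uses the same `(?P<name>...)` named-group syntax as the example regex, so you can test a pattern locally before adding it. The sketch below shows the extraction step only. It is not how the agent implements parsing, and `scrape_attributes` is a hypothetical helper.

```python
import re

# The pattern from the example above; (?P<name>...) declares a named group.
PATTERN = re.compile(r"\[(?P<date>.+)\] (?P<log_message>.+)")


def scrape_attributes(body: str) -> dict[str, str]:
    """Return named groups as attributes, or {} when the body does not match."""
    match = PATTERN.match(body)
    return match.groupdict() if match else {}


print(scrape_attributes("[2024-12-18T05:27:45.361245] dev test"))
# {'date': '2024-12-18T05:27:45.361245', 'log_message': 'dev test'}
```

The `.+` inside the brackets is greedy. If a message itself contains `] `, the `date` group would stretch to the last occurrence. A tighter group such as `(?P<date>[^\]]+)` avoids that.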

3. JSON Log Processing

Enable JSON Log Processing when logs are JSON formatted or contain nested objects. Middleware auto-parses nested keys so fields become queryable and easier to use in search and dashboards.

Examples:

- `instant.epochSecond`

- `instant.nanoSecond`

- `service.name`

These appear as parsed body attributes in the Logs UI.

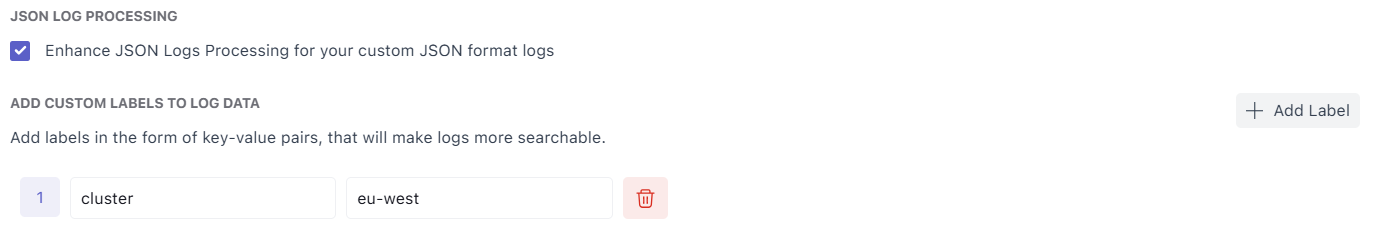
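Turning nested JSON into dotted keys like `instant.epochSecond` is essentially a recursive flatten. The sketch below assumes nested objects are joined with `.` and that lists and scalar values are kept as-is. The sample body and the `flatten` and `parse_json_body` helpers are hypothetical, and Middleware's parser may handle arrays or key collisions differently.

```python
import json
from typing import Any


def flatten(obj: dict[str, Any], prefix: str = "") -> dict[str, Any]:
    """Turn nested objects into dotted keys, e.g. {"a": {"b": 1}} -> {"a.b": 1}."""
    flat: dict[str, Any] = {}
    for key, value in obj.items():
        name = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, name))  # recurse into nested objects
        else:
            flat[name] = value  # scalars and lists are kept as-is
    return flat


def parse_json_body(body: str) -> dict[str, Any]:
    """Parse a JSON log body; non-JSON or non-object bodies yield no attributes."""
    try:
        data = json.loads(body)
    except json.JSONDecodeError:
        return {}
    return flatten(data) if isinstance(data, dict) else {}


body = '{"instant": {"epochSecond": 1734499665, "nanoSecond": 361245000}, "service": {"name": "payments"}}'
print(parse_json_body(body))
# {'instant.epochSecond': 1734499665, 'instant.nanoSecond': 361245000, 'service.name': 'payments'}
```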

4. Add Custom Labels

Custom labels attach static metadata to logs during ingestion.

They help with ownership, segmentation, and operational filtering at scale.

Example labels:

- `env = production`

- `team = payments`

- `cluster = eu-west`

Typical benefits:

- better multi-team separation

- cleaner environment filters

- improved cost and ownership tracking

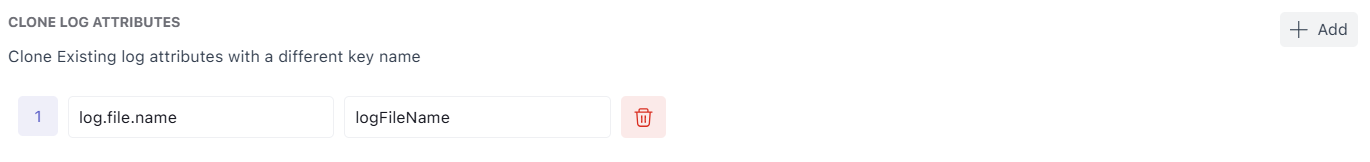
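Attaching custom labels is a merge of a fixed dictionary into every record's attributes. The sketch below uses the example labels above. It assumes an attribute already present on a record wins over a static label of the same name, which may not match how Middleware resolves conflicts. `add_custom_labels` and the record shape are hypothetical.

```python
# Hypothetical static labels, matching the example above.
STATIC_LABELS = {"env": "production", "team": "payments", "cluster": "eu-west"}


def add_custom_labels(record: dict) -> dict:
    """Return a copy of the record with static labels merged into its attributes.

    Assumption: an attribute already on the record is kept rather than
    overwritten by a static label with the same key.
    """
    attributes = {**STATIC_LABELS, **record.get("attributes", {})}
    return {**record, "attributes": attributes}


print(add_custom_labels({"body": "charge ok", "attributes": {"env": "staging"}}))
# {'body': 'charge ok', 'attributes': {'env': 'staging', 'team': 'payments', 'cluster': 'eu-west'}}
```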

5. Clone Log Attributes

Clone Log Attributes lets you rename fields for consistency across services and log formats.

Example:

`log.file.name` → `logFileName`

Use this to standardize naming conventions and reduce query confusion across teams.

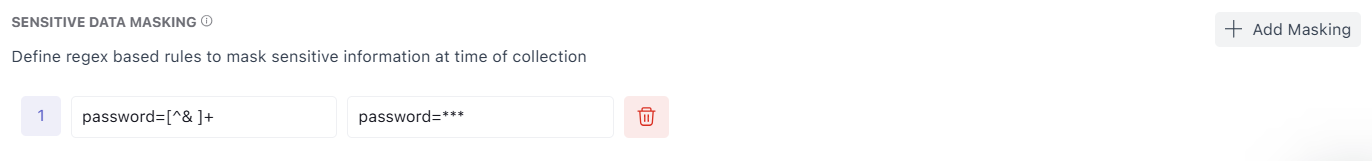
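The section describes this feature as renaming, while its name suggests copying. The sketch below assumes "clone" copies the value to the new key and keeps the original by default. Setting `keep_source=False` gives a true rename. `clone_attribute` is a hypothetical helper, not Middleware's API.

```python
def clone_attribute(attributes: dict, source: str, target: str, keep_source: bool = True) -> dict:
    """Copy attributes[source] to attributes[target]; optionally drop the source key.

    Assumption: a missing source key leaves the attributes unchanged.
    """
    result = dict(attributes)  # never mutate the caller's dictionary
    if source not in result:
        return result
    result[target] = result[source]
    if not keep_source and target != source:
        del result[source]  # turns the clone into a rename
    return result


attrs = {"log.file.name": "access.log"}
print(clone_attribute(attrs, "log.file.name", "logFileName"))
# {'log.file.name': 'access.log', 'logFileName': 'access.log'}
print(clone_attribute(attrs, "log.file.name", "logFileName", keep_source=False))
# {'logFileName': 'access.log'}
```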

6. Sensitive Data Masking

Sensitive Data Masking applies regex-based replacement before logs leave the machine. This keeps secrets and personal data from being forwarded in readable form.

Example:

- Regex: password=[^& ]+

- Replace: password=***

- Result: `user=alice&password=hunter2` becomes `user=alice&password=***`

This protects:

- secrets

- tokens

- PII

It also strengthens compliance posture early in the telemetry path.
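The masking step is a regex substitution applied to each line before it is forwarded. The sketch below uses the example rule exactly as given. `MASKING_RULES` and `mask` are hypothetical names, and the list shows how further (pattern, replacement) pairs could be added.

```python
import re

# The rule from the example above; more (pattern, replacement) pairs can be appended.
MASKING_RULES = [
    (re.compile(r"password=[^& ]+"), "password=***"),
]


def mask(line: str) -> str:
    """Apply every masking rule in order; each rule replaces all of its matches."""
    for pattern, replacement in MASKING_RULES:
        line = pattern.sub(replacement, line)
    return line


print(mask("POST /login user=alice&password=hunter2&remember=1"))
# POST /login user=alice&password=***&remember=1
```

Masking only catches the formats its patterns describe. A variant such as `Password: ...` or a JSON `"password": "..."` field would pass through unmasked, so rules should cover every format your services actually emit.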

Need assistance or want to learn more about Middleware? Contact our support team or join our Slack channel.